Hearing Is Believing

By Richard M. Stern and Nelson Morgan

NOTE: This is an overview of the entire article, which appeared in the November 2012 issue of the IEEE Signal Processing Magazine.


The article is an extensive review of feature extraction methods for Automatic Speech Recognition (ASR) related to hearing and sound perception processes in humans and other mammals. It provides insight into the signal processing that takes place in the cochlea and auditory nerve, brain stem and auditory cortex. It then describes research work which has used this knowledge to improve machine speech recognition.

The authors explain that the common framework for state-of-the-art ASR systems has been fairly stable for about two decades now: a transformation of a short-term power spectral estimate is computed every 10 ms and then is used as an observation vector for Gaussian-mixture-based Hidden Markov Models (HMMs) that have been trained on as much data as possible, augmented by prior probabilities for word sequences generated by smoothed counts from many examples. The most probable word sequence is chosen, taking into account both acoustic and language probabilities. While these systems have improved greatly over time, much of the improvement has arguably come from the availability of more data, more detailed models to take advantage of the greater amount of data, and larger computational resources to train and evaluate the models. Still other improvements have come from the increased use of discriminative training of the models. Additional gains have come from changes to the front end (e.g., normalization and compensation schemes), and/or from adaptation methods that could be implemented either in the stored models or through equivalent transformations of the incoming features. Even improvements obtained through discriminant training [such as with the minimum phone error (MPE) method] have been matched in practice by discriminant transformations of the features [such as feature MPE (fMPE)].
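The first stage of that framework, a short-term power spectral estimate computed every 10 ms, can be sketched in a few lines. This is only an illustration, not the authors' front end: the 10 ms hop comes from the text, while the 16 kHz sample rate, the 25 ms Hamming window, the plain logarithm standing in for the "transformation", and the function name `short_term_log_power_spectra` are all assumptions made for the example.

```python
import numpy as np

def short_term_log_power_spectra(signal, sample_rate=16000,
                                 frame_ms=25.0, hop_ms=10.0):
    """Return one log power spectrum per hop (rows are frames).

    Assumed values: 16 kHz audio, 25 ms Hamming window.
    Only the 10 ms hop is taken from the article overview.
    """
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop_len = int(round(sample_rate * hop_ms / 1000.0))
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one analysis frame")

    # Number of complete frames that fit in the signal.
    n_frames = 1 + (len(signal) - frame_len) // hop_len
    window = np.hamming(frame_len)

    # Slice overlapping frames and taper each one.
    frames = np.stack([
        signal[i * hop_len: i * hop_len + frame_len] * window
        for i in range(n_frames)
    ])

    # Power spectrum of each frame, then log compression
    # (a small floor avoids log(0)).
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    return np.log(power + 1e-10)

# Usage: one second of a 440 Hz tone sampled at 16 kHz.
t = np.arange(16000) / 16000.0
features = short_term_log_power_spectra(np.sin(2 * np.pi * 440.0 * t))
```

Each row of `features` would play the role of one observation vector handed to the acoustic model. Practical systems typically apply further processing at this point, such as frequency warping and decorrelation, which the sketch leaves out.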

In present practice, ASR does not match the quality of human transcription, even at high signal-to-noise ratios. The gap widens further in the presence of noise, reverberation, and similar degradations. The article discusses a number of techniques being explored to improve ASR performance.

As background for understanding the techniques being used and proposed for improved ASR, the article begins with an explanation of auditory processes occurring in the cochlea, such as frequency selectivity and amplitude compression. It moves on to neural and cortical effects, such as sensitivity to interaural time delay. Finally, it addresses psychophysical effects, such as auditory thresholds and loudness perception.

Following this tour through the biological aspects of mammalian sound recognition, the article reports on existing and emerging ASR techniques that draw on this biological understanding.

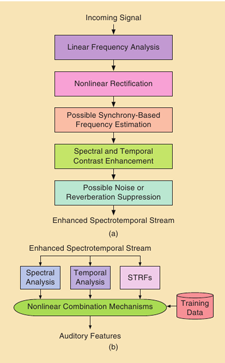

Figure: (a) Generalized organization of contemporary feature extraction procedures that are analogous to auditory periphery function. Not all blocks are present in all feature extraction approaches, and the organization may vary. (b) Processing of the spectrotemporal feature extraction by spectral analysis, temporal analysis, or the more general case of (possibly many) Spectrotemporal Receptive Fields (STRFs). These can incorporate long temporal support, can comprise a few or many streams, and can be combined simply or with discriminative mechanisms incorporating labeled training data.

The article concludes with a review of auditory modelling, which is emerging as an aid to feature extraction as advances in computing power make such modelling more feasible.

ABOUT THE AUTHORS

Richard M. Stern (rms@cs.cmu.edu) is currently a professor in the Department of Electrical and Computer Engineering and in the Language Technologies Institute as well as a lecturer in the School of Music at CMU, where he has been since 1977. He is well known for work in robust speech recognition and binaural perception, and his group has developed algorithms for statistical compensation for noise and filtering, missing-feature recognition, multimicrophone techniques, and physiologically motivated feature extraction. He is a Fellow of the Acoustical Society of America and the International Speech Communication Association (ISCA). He was the ISCA Distinguished Lecturer in 2008-2009 and the general chair of Interspeech 2006.

Nelson Morgan (morgan@icsi.berkeley.edu) has led speech research at ICSI since 1988 and is a professor in residence at the University of California, Berkeley. He has over 200 publications, including a speech and audio textbook coauthored with Ben Gold (with a new edition prepared with Dan Ellis of Columbia). He was formerly co-editor-in-chief of Speech Communication. He received the 1997 IEEE Signal Processing Magazine Best Paper Award. He jointly developed RASTA processing with Hynek Hermansky, and hybrid HMM/MLP systems with H. Bourlard. He is a Fellow of the IEEE and ISCA.