If We Can See It, We Can Fix It: From Medical Imaging to Guidance Environments for Computer-Assisted Interventions

by Cristian A. Linte and Ziv Yaniv

Minimally invasive interventions are surgical procedures that are conducted through small incisions or natural orifices, under reduced tissue exposure. This approach to medical interventions is motivated by improved outcomes, primarily due to the reduction in trauma to the patient previously associated with reaching the target. While the less invasive approach is beneficial to patients, it has introduced new challenges for physicians. Direct visualization of the surgical scene and the organs being treated is limited, and often absent altogether, which requires significant reliance on medical imaging and computer-assisted navigation. This article provides a concise overview of the field of computer-assisted guidance and navigation for minimally invasive interventions and of current research trends in the domain.

The past several decades have marked a shift from open interventions to minimally invasive therapy. Despite the benefit of ample direct physical and visual access to the organs in traditional procedures, physicians realized that the large incisions and accompanying collateral damage were not only a significant cause of complications, added trauma, and morbidity, but also contributed to prolonged recovery and delayed return of the patients to their daily activities. These findings led to the introduction and wide acceptance of 1) endoscopic and laparoscopic imaging as a means to significantly reduce the incision needed to access internal organs [1], as well as 2) the interventional radiology sub-specialty that enabled therapy delivery via catheters and percutaneous access under X-ray fluoroscopy or ultrasound image guidance [2]. Both developments represent a critical shift from the traditional direct view to image-guided visualization.

Although these developments have revolutionized clinical practice, the path to minimal invasiveness has not been free of challenges. The outcome of an intervention depends on the clinician’s ability to recreate the surgical scene by mentally fusing high-quality, often 3D, pre-operative images with lower-quality, typically 2D, real-time images of the anatomy, which are almost always acquired with the patient in a different position and under different physiological conditions. As a consequence, surgical navigation (i.e., “GPS-like”) systems were independently developed for use in several clinical specialties, including neuro- and orthopaedic surgery, endoscopy, and interventional radiology. These clinical advances have recently led to the realization that all these distinct medical disciplines share a common set of technologies, resulting in a symbiotic, cross-disciplinary treatment paradigm [3].

The goal of interventional guidance environments is to allow physicians to plan and execute minimally invasive interventions by increasing their spatial navigation abilities via fusion of information acquired pre- and intra-operatively, and displaying the relevant guidance information in an intuitive manner. The key technologies underlying development of these systems include: medical imaging, data visualization, image segmentation and registration, tracking systems, and software engineering [4]–[6]. While existing guidance environments do improve the spatial navigation abilities of physicians, there are still challenges associated with their use.

Challenges and Lessons Learned

Right Place, Right Time, Right View

To ensure optimal integration into the procedure workflow, guidance environments must feature accurate identification and targeting of the surgical sites, near real-time performance, and display of only the information relevant to the specific task. For moving organs, such as those treated in thoraco-abdominal procedures, real-time or near real-time non-rigid registration and organ tracking are active areas of research [7]. Identifying the specific stage of an intervention, or using other cues, to determine the most appropriate information to display has been investigated in certain settings [8], [9] and remains an active area of interest. It should be noted that holistic evaluation of these systems is still lacking. A recent study evaluating endoscopic navigation [10] showed improved accuracy when using an augmented-reality guidance environment, but also revealed that users suffered from inattentional blindness: they were less likely to identify unexpected visible findings in their field of view. Automated detection of anomalies in the field of view is an avenue of research that can potentially mitigate this issue.

Catering to the Clinician

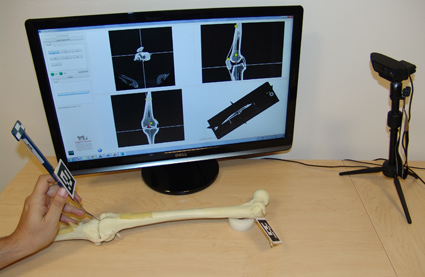

While newly developed technology is intended to address current clinical challenges, new ways of conducting routine procedures are often more challenging for clinicians. As a consequence, developers of navigation environments should strive to simplify the workflows of, and interaction with, their systems. Currently, the use of these navigation aids is often not intuitive and involves a steep learning curve. Training is thus conducted in dedicated high-end facilities such as the IRCAD center described in [3]. While high-quality training for clinicians requires considerable financial investment, the fundamentals of navigation can be taught using very low-cost simulations [11]. A free navigation simulator that mimics many of the functionalities of commercial guidance systems is shown in Figure 1 and is available online.

Figure 1: Low-cost tabletop simulation environment for navigation guidance. A Sawbones model serves as the mock patient, and tracking is performed by a webcam. The interface shows standard radiological views corresponding to the location of the tip of the pointer tool.
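The core computation behind a display like the one in Figure 1 can be sketched in a few lines. The example below is a minimal illustration, not the simulator's actual code: it assumes a rigid registration transform and a tracked tool pose are already available as 4x4 homogeneous matrices, and that the image volume axes are aligned with the image coordinate axes. All function names, transforms, and numbers are hypothetical.

```python
# Illustrative sketch only: names, transforms, and numbers are hypothetical.

def apply_transform(T, p):
    """Apply a 4x4 homogeneous transform T (nested lists) to a 3D point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3]
                 for r in range(3))

def tip_in_image(T_image_from_tracker, T_tracker_from_tool, tip_offset_tool):
    """Map the pointer tip from tool coordinates to image coordinates (mm)."""
    tip_tracker = apply_transform(T_tracker_from_tool, tip_offset_tool)
    return apply_transform(T_image_from_tracker, tip_tracker)

def slice_indices(tip_image_mm, origin_mm, spacing_mm, size_voxels):
    """Convert a physical point to the slice indices of the three standard views.

    Assumes axis-aligned volume axes (no direction cosines).
    Returns None when the tip lies outside the image volume.
    """
    idx = []
    for p, o, s, n in zip(tip_image_mm, origin_mm, spacing_mm, size_voxels):
        i = round((p - o) / s)
        if not 0 <= i < n:
            return None
        idx.append(i)
    return {"sagittal": idx[0], "coronal": idx[1], "axial": idx[2]}

# Hypothetical tool pose reported by the tracker (pure translation here).
T_tracker_from_tool = [[1, 0, 0, 100], [0, 1, 0, 50], [0, 0, 1, 200], [0, 0, 0, 1]]
# Hypothetical registration: 90 degree rotation about z plus a translation.
T_image_from_tracker = [[0, -1, 0, 80], [1, 0, 0, -60], [0, 0, 1, -300], [0, 0, 0, 1]]

tip = tip_in_image(T_image_from_tracker, T_tracker_from_tool, (0, 0, 120))
views = slice_indices(tip, (0, 0, 0), (0.5, 0.5, 2.0), (256, 256, 100))
print(tip, views)
```

In a real system the tool pose would be refreshed at the tracker's frame rate, and the three slices re-extracted from the volume each time the tip moves.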

Impacting Clinical Translation

To justify integration into the clinical workflow, new technology must be evaluated to ensure that it addresses a real clinical need without impeding traditional workflows, improves the overall procedure outcome, and provides a cost-effective alternative to the currently accepted treatment approaches. From a cost perspective, guidance environments are no “silver bullet”: they appear to be cost effective for some disciplines and procedures [12], but not for others [13]. Developers of image guidance systems need to consider cost-effectiveness in their designs; for example, a streamlined system that is quick to set up and disassemble can greatly reduce operating room time, which in turn significantly lowers the costs associated with using such equipment.

Looking Forward

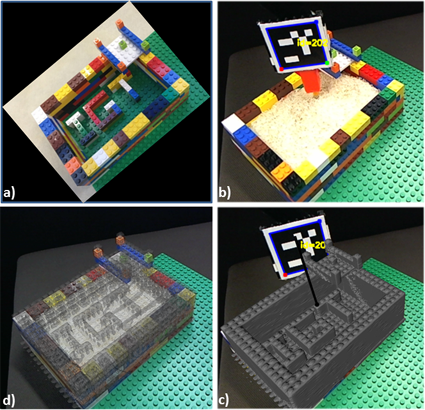

Mixed reality environments for computer-assisted intervention provide clinicians with an alternative, less invasive means for accurate and intuitive visualization by replacing or augmenting their direct view of the real world with computer graphics information (Figure 2) available thanks to the processing and manipulation of pre- and intra-operative medical images.

Figure 2: Simplified diagram illustrating the use of augmented reality to display critical information that is occluded from the user. The “IGT” letters inside the IGT LEGO phantom (a) are hidden when the phantom is filled with rice (b). A virtual model of the phantom, generated from a CT scan (c), is superimposed onto the real video view of the physical phantom and updated in real time according to the camera pose (d).
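The overlay step illustrated in Figure 2 amounts to projecting points of the virtual model into the video frame using the current camera pose. The sketch below shows this with an ideal pinhole camera model; it is an assumption-laden illustration rather than the method used to produce the figure. Lens distortion is ignored, and the function name, intrinsic parameters, and pose values are all hypothetical.

```python
# Illustrative sketch only: ideal pinhole camera, hypothetical values throughout.

def project_points(points_world, R, t, fx, fy, cx, cy):
    """Project 3D model points (mm) to pixel coordinates.

    R (3x3 nested lists) and t (length 3) map world to camera coordinates:
    p_cam = R * p_world + t. fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. Points at or behind the camera
    plane yield None.
    """
    pixels = []
    for p in points_world:
        xc, yc, zc = (sum(R[r][c] * p[c] for c in range(3)) + t[r]
                      for r in range(3))
        if zc <= 0:
            pixels.append(None)
            continue
        pixels.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return pixels

# Hypothetical pose: camera looks along +z, model origin 500 mm in front of it.
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = (0, 0, 500)
model_points = [(0, 0, 0), (50, -25, 0), (0, 0, -600)]

overlay = project_points(model_points, R, t, fx=800, fy=800, cx=320, cy=240)
print(overlay)
```

Re-running the projection whenever the tracked camera pose (R, t) changes keeps the virtual model aligned with the live video; the accuracy of the overlay depends directly on the accuracy of the registration and camera calibration.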

An ideal system, best positioned to make an impact in current clinical practice, seamlessly blends diagnostic, planning, and guidance information into a single visualization environment displayed at the interventional site. It provides accurate registration and tracking along with high-fidelity visualization and information display, enables smooth data transfer, and fits within traditional interventional suites without interfering with current workflows and typical equipment.

While some image guidance systems leverage highly complex infrastructure whose acquired information often overwhelms or confuses the user, other systems empower the user to select the relevant information. Although addressing human-computer interaction in image guidance is an exception rather than the norm, such challenges have been explored, for example, when selecting the optimal visualization strategy for percutaneous procedures in interventional radiology [14].

Finally, the broader scope of image guidance has seen technologies that aim beyond minimal invasiveness and toward providing non-invasive treatment. One such technology, which has made inroads into clinical care, is based on the use of ultrasound to deliver therapy, either in the form of thermal therapy or as a means to perform controlled drug delivery [15]. Although this technology has many potential benefits, it currently relies on interventional MRI to guide the therapy and is thus still a costly solution with limited adoption.

Bibliography

1. G. S. Litynski, “Endoscopic surgery: the history, the pioneers,” World J Surg, vol. 23, no. 8, pp. 745–753, 1999.

2. G. M. Soares and T. P. Murphy, “Clinical Interventional Radiology: Parallels with the Evolution of General Surgery,” Semin Interv. Radiol, vol. 22, no. 1, pp. 10–14, 2005.

3. J. Marescaux and M. Diana, “Inventing the future of surgery,” World J. Surg., vol. 39, no. 3, pp. 615–622, Mar. 2015.

4. Z. Yaniv and K. Cleary, “Image-Guided Procedures: A review,” Image Science and Information Systems Center, Georgetown University, CAIMR TR-2006-3, Apr. 2006.

5. T. Peters and K. Cleary, Eds., Image-Guided Interventions: Technology and Applications. Springer, 2008.

6. K. Cleary and T. M. Peters, “Image-guided interventions: technology review and clinical applications,” Annu Rev Biomed Eng, vol. 12, pp. 119–142, 2010.

7. L. Maier-Hein, A. Groch, A. Bartoli, S. Bodenstedt, G. Boissonnat, P.-L. Chang, N. T. Clancy, D. S. Elson, S. Haase, E. Heim, J. Hornegger, P. Jannin, H. Kenngott, T. Kilgus, B. Muller-Stich, D. Oladokun, S. Rohl, T. R. dos Santos, H.-P. Schlemmer, A. Seitel, S. Speidel, M. Wagner, and D. Stoyanov, “Comparative Validation of Single-Shot Optical Techniques for Laparoscopic 3-D Surface Reconstruction,” IEEE Trans. Med. Imaging, vol. 33, no. 10, pp. 1913–1930, Oct. 2014.

8. D. Katić and others, “Context-aware Augmented Reality in laparoscopic surgery,” Comput Med Imaging Graph, vol. 37, no. 2, pp. 174–182, 2013.

9. D. Katić, P. Spengler, S. Bodenstedt, G. Castrillon-Oberndorfer, R. Seeberger, J. Hoffmann, R. Dillmann, and S. Speidel, “A system for context-aware intraoperative augmented reality in dental implant surgery,” Int. J. Comput. Assist. Radiol. Surg., vol. 10, no. 1, pp. 101–108, Jan. 2015.

10. B. J. Dixon, M. J. Daly, H. Chan, A. D. Vescan, I. J. Witterick, and J. C. Irish, “Surgeons blinded by enhanced navigation: the effect of augmented reality on attention,” Surg. Endosc., vol. 27, no. 2, pp. 454–461, Feb. 2013.

11. Ö. Güler and Z. Yaniv, “Image-guided navigation: a cost effective practical introduction using the Image-Guided Surgery Toolkit (IGSTK),” Conf. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc., vol. 2012, pp. 6056–6059, 2012.

12. Y. Lai, K. Li, J. Li, and S. X. Liu, “Cost-effectiveness of navigated radiofrequency ablation for hepatocellular carcinoma in China,” Int. J. Technol. Assess. Health Care, vol. 30, no. 4, pp. 400–408, Oct. 2014.

13. J. Margier, S. D. Tchouda, J.-J. Banihachemi, J.-L. Bosson, and S. Plaweski, “Computer-assisted navigation in ACL reconstruction is attractive but not yet cost efficient,” Knee Surg. Sports Traumatol. Arthrosc. Off. J. ESSKA, Jan. 2014.

14. E. Varga, P. M. T. Pattynama, and A. Freudenthal, “Manipulation of mental models of anatomy in interventional radiology and its consequences for design of human-computer interaction,” Cogn Tech Work, pp. 1–17, 2012.

15. K. Hynynen, “MRIgHIFU: A Tool for Image-Guided Therapeutics,” J Magn Reson Imaging, vol. 34, no. 3, pp. 482–493, 2011.

Contributors

Cristian A. Linte, PhD received his doctoral degree in Biomedical Engineering from Western University in Ontario, Canada. He is an Assistant Professor in the Department of Biomedical Engineering and the Chester F. Carlson Center for Imaging Science at Rochester Institute of Technology.

Ziv Yaniv, PhD received his doctoral degree in Computer Science from the Hebrew University of Jerusalem in 2004. He is a Senior Scientist in the Office of High Performance Computing and Communications at the National Library of Medicine and the National Institutes of Health.
