The power of small brains

By Nicolas Franceschini

For several decades, engineers and biologists have attempted to harness animals’ sensory-motor intelligence to build autonomous machines. In the May 2014 issue of Proceedings of the IEEE, devoted to Engineering Intelligent Electronic Systems Based on Computational Neuroscience, the author describes how microoptical, behavioral and electrophysiological experiments carried out on insects have led to the construction of seven proof-of-concept robots equipped with insect-derived neuromorphic sensors and autopilots. These robots are in turn used to better understand the clever tricks insects use to negotiate their complex environments with a level of agility that greatly surpasses that of both vertebrate animals and present-day aerospace robots.

Introduction

For designing efficient seeing vehicles and microvehicles capable of steering their way quickly through complex and ever-changing environments, the successful visual guidance system of humble flying creatures, such as flies and bees, may provide some unexpected solutions [1].

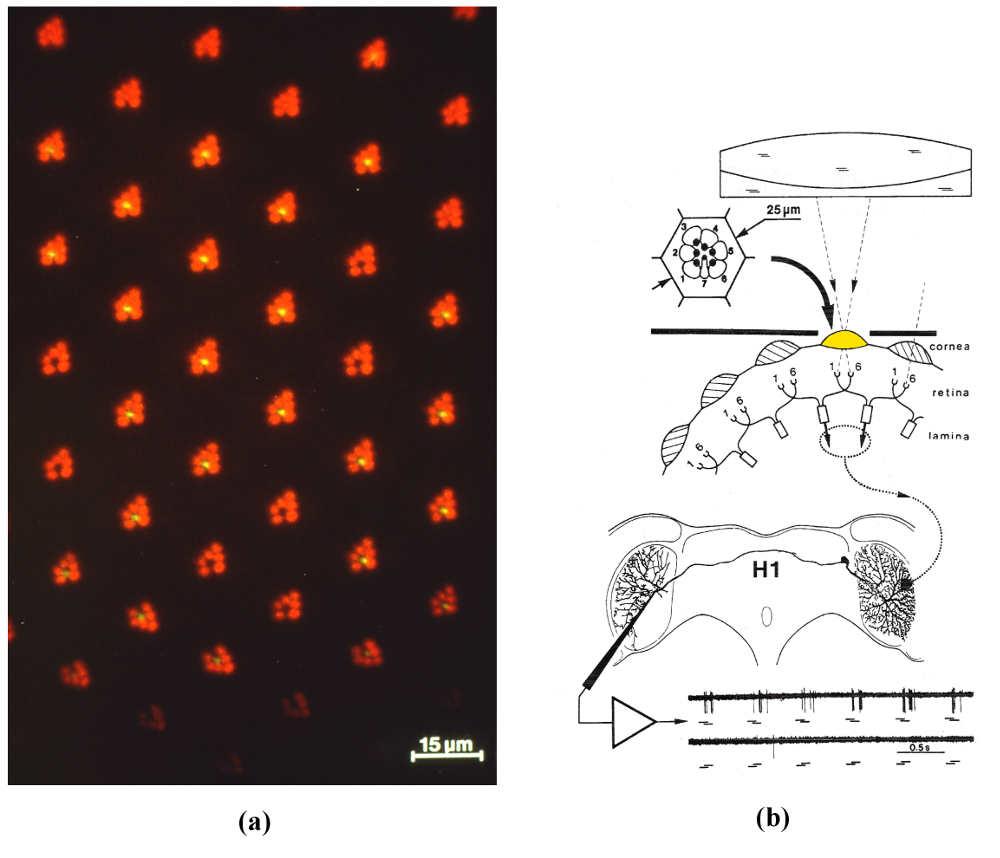

Figure 1: (a) Photoreceptor array of the housefly’s compound eye: seven micrometer-sized photoreceptor cells are visible in vivo in the focal plane of each facet lenslet (epifluorescence microscopy combined with corneal neutralization). (b) Principle of the experiments aimed at deciphering the inner processing structure of a motion-detecting neuron. Sequential illumination of two single photoreceptors (R1 and R6) drives an EMD (dotted ellipse) conveying its outputs (dotted line) to the dendritic arbor of H1. Bottom part: a burst of spikes is elicited whenever the light sequence mimics motion in the preferred direction (R1 then R6, top trace). The opposite sequence (R6 then R1, bottom trace) elicits hardly any response (flash duration 100 ms, interstimulus interval 50 ms) (From [1]).

Whether in insects or in humans, motion-sensitive neurons are of paramount importance for visually guided behavior [2] [4]. These neurons allow an animal to sense the Optic Flow (OF), which describes the angular speed at which images stream across its retina as a consequence of its own movement. Pioneering work on flies over the last few decades has identified about 50 directionally selective motion-sensitive neurons in a particular ganglion, the lobula plate [3]. These lobula plate tangential cells (LPTCs) are uniquely identifiable neurons, so recordings can be made from the same neuron in different animals. The LPTCs act as wide-field optic flow sensors and are involved, in particular, in visual guidance [3-5]. Each LPTC possesses a large dendritic tree that receives the synaptic outputs of hundreds of Elementary Motion Detectors (EMDs), each detecting motion locally and together covering a wide field of view. Ever since Hassenstein and Reichardt proposed the “correlation scheme” for motion detection in the 1950s, figuring out how a single EMD works has been a major challenge.
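As a reminder of the geometry involved (a standard kinematic relation, not a result specific to this work): when an agent translates in a straight line at speed v, a contrasting feature at distance D, seen at an angle φ from the direction of travel, slips across the eye at the angular speed ω given below. The second form is the special case of a downward-looking sensor (φ = 90°) at height h above the ground, moving at groundspeed v_x.

```latex
\omega = \frac{v}{D}\,\sin\varphi ,
\qquad
\omega_{\text{ventral}} = \frac{v_x}{h} \quad (\varphi = 90^\circ,\; D = h).
```

Translational OF therefore scales with the ratio of speed to distance, whereas the OF generated by a pure rotation does not depend on D at all.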

The inner processing structure of an elementary motion detector (EMD)

To analyze how a single EMD works, we set out to stimulate a single EMD. Our group has developed several microoptical techniques that allow the micrometer-sized photoreceptors of the fly compound eye (Fig. 1a) to be accessed individually in vivo. With an incident-light “microscope-telescope”, we sequentially illuminated two neighboring photoreceptors (R1 and R6) behind a single facet so as to produce “apparent motion”, while recording the electrical activity of an identified LPTC (Fig. 1b) [6]. The selected LPTC was the H1 neuron, known to respond to horizontal back-to-front motion in the ipsilateral eye with an increase in the frequency of nerve impulses (“spikes”) [3]. H1 responded consistently to our optical microstimulation with a burst of spikes whenever the sequence mimicked motion in the preferred direction (R1 then R6), and hardly responded to the opposite sequence (R6 then R1) (see Fig. 1b, bottom traces). Carefully selected sequences of light flashes and/or light steps allowed us to identify the inner processing structure of an EMD (Fig. 2) [7].

Figure 2: (a) and (b) Sequential illumination of the photoreceptors R1->R6 drives the two cartridges A->B, which convey an excitatory signal (+) to the LPTC (H1). The box in the left arm describes a second-order low-pass filter, in agreement with its measured impulse response. The box in the right arm describes a first-order high-pass filter, in agreement with its measured step response. (c) (black scheme): the observed responses suggested that the EMD is split into two subunits operating in parallel, an ON-EMD and an OFF-EMD, which sense the motion of light edges and dark edges separately and both convey an excitatory signal to H1 [7] (the split EMD subunit represented here is that of the preferred direction). (colored letters): recent experiments involving the genetic silencing of lamina neurons L1 and L2 suggested that these two neurons are the front ends of the ON and OFF pathways [8]. Motion signals are conveyed down to the LPTC via cells T4 and T5 [9] [11], which were recently found to be strongly directionally selective [14]. (From [1])

The EMD model we arrived at [7] consists of an elaborate “Hassenstein-Reichardt detector”, each subunit of which contains a second-order low-pass (LP) filter in one arm and a first-order high-pass (HP) filter in the other (Fig. 2a). The signal delivered by the LP arm does not show up in the EMD response itself, but covertly facilitates the passage of the high-pass-filtered signal from the other arm down to the wide-field LPTC. These single-photoreceptor microstimulation studies further showed that each EMD subunit is split into two parallel ON and OFF pathways (Fig. 2b): one detects the motion of light edges, the other the motion of dark edges [7]. This peculiarity improves, among other things, the EMD’s refresh rate, as both the leading edge and the trailing edge of a moving object contribute to its response.
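The model lends itself to a small numerical sketch. The Python below is a hypothetical, idealized illustration, not the circuit identified in [7]: the filter time constants are invented, the ON/OFF split is assumed to be done by half-wave rectifying a high-passed copy of each photoreceptor signal, and the LP arm's "facilitation" of the HP arm is modeled as a multiplication, as in the classic Hassenstein-Reichardt correlator. It replays an apparent-motion sequence loosely based on the Fig. 1b protocol (100 ms flashes separated by a 50 ms gap, which is our reading of the caption's interstimulus interval) on R1 then R6, and in the reverse order.

```python
import numpy as np

DT = 0.001  # s: simulation step (1 ms)

def lowpass1(x, tau):
    """First-order low-pass filter, forward-Euler discretization."""
    y = np.zeros_like(x)
    state, a = 0.0, DT / tau
    for k, xk in enumerate(x):
        state += a * (xk - state)
        y[k] = state
    return y

def lowpass2(x, tau):
    """Second-order low-pass: two identical first-order stages in cascade."""
    return lowpass1(lowpass1(x, tau), tau)

def highpass1(x, tau):
    """First-order high-pass: the input minus its first-order low-passed version."""
    return x - lowpass1(x, tau)

def on_off_split(lum, tau=0.02):
    """Split luminance into ON (brightening) and OFF (dimming) transients by
    half-wave rectifying a high-passed copy (an assumed front end)."""
    transient = highpass1(lum, tau)
    return np.maximum(transient, 0.0), np.maximum(-transient, 0.0)

def subunit(arm_a, arm_b, tau_lp=0.05, tau_hp=0.02):
    """LP2 of arm A facilitates the rectified HP1 of arm B (facilitation = product)."""
    return lowpass2(arm_a, tau_lp) * np.maximum(highpass1(arm_b, tau_hp), 0.0)

def emd_output(r1, r6):
    """ON-EMD + OFF-EMD, both wired for the preferred direction R1 -> R6."""
    on1, off1 = on_off_split(r1)
    on6, off6 = on_off_split(r6)
    return subunit(on1, on6) + subunit(off1, off6)

def flash(n, start, dur):
    """Luminance trace of n samples that is 1 during [start, start + dur), else 0."""
    x = np.zeros(n)
    x[start:start + dur] = 1.0
    return x

N = 600                    # 600 ms of simulated time
early = flash(N, 50, 100)  # 100 ms flash starting at 50 ms
late = flash(N, 200, 100)  # 100 ms flash starting 50 ms after the first one ends

preferred = emd_output(r1=early, r6=late).sum() * DT  # R1 then R6
null = emd_output(r1=late, r6=early).sum() * DT       # R6 then R1
print(f"preferred: {preferred:.5f}   null: {null:.5f}")
```

Because the facilitating LP signal must already be present when the HP transient arrives, the R1-then-R6 sequence produces a positive summed output, while the reversed sequence yields essentially none (exactly zero in this idealized sketch). Light onsets and light offsets both contribute, through the ON and OFF subunits respectively.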

Recent neurogenetic and optogenetic studies on the fruit fly Drosophila have provided support for the existence of this split pathway [8]. Refined connectomic analyses have identified part of the neuronal chain from the EMD inputs (the two triangles in Fig. 2a, b) down to the EMD outputs on the dendrites of the LPTCs [9] [10] [11]. Calcium imaging and patch-clamp recordings have allowed the response properties of some of the interneurons along the ON and OFF pathways to be characterized [12] [13], down to the synaptic terminals of the EMDs on the dendritic arbor of an LPTC [14]. Other steps of the processing structure (Fig. 2c) await biological identification.

Robot Fly endowed with fly-inspired neuromorphic optic flow sensors

Inspired by the results of our single-cell analyses of the fly EMD, our group designed and constructed miniature electro-optic EMDs in the early 1980s. Each EMD circuit actually comprised two parallel EMDs, one responding to the motion of a light edge (ON), the other to the motion of a dark edge (OFF) (cf. Fig. 2c). In 1991, a circular array of electro-optic OF sensors gave rise to the Robot Fly, the first autonomous mobile robot able to run to its target at a relatively high speed (0.5 m/s) while avoiding obstacles using OF sensors [15]. The Robot Fly analyzed the self-induced translational OF produced during each of its fly-like translatory steps and corrected its steering in a purely reactive way, based on the motion perceived during its previous translatory step. Vision was inhibited during rotations by a bio-inspired mechanism of “saccadic suppression”.
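The obstacle-avoidance principle can be illustrated with the translational OF geometry. The sketch below assumes that each electro-optic EMD estimates the local angular speed from the delay Δt with which a contrast edge crosses two neighboring visual axes separated by Δφ (ω ≈ Δφ/Δt), and then inverts ω = (v/D) sin φ to obtain the distance D to that contrast. The function names and all numbers are hypothetical; the Robot Fly's actual analog circuitry is described in [15].

```python
import math

def angular_speed_from_delay(delta_phi_deg: float, delta_t_s: float) -> float:
    """Time-of-travel estimate of local OF (rad/s): an edge that takes delta_t
    to cross two visual axes delta_phi apart moves at about delta_phi / delta_t."""
    return math.radians(delta_phi_deg) / delta_t_s

def distance_from_translational_of(speed: float, azimuth_deg: float, omega: float) -> float:
    """Invert omega = (speed / D) * sin(azimuth) for the distance D (m).
    Valid only during pure translation: rotational OF carries no distance information."""
    return speed * math.sin(math.radians(azimuth_deg)) / omega

# Hypothetical numbers: 0.5 m/s translation, a sensor looking 90 deg off the heading,
# 2 deg between neighbouring visual axes, and a measured crossing delay of 70 ms.
omega = angular_speed_from_delay(2.0, 0.070)
distance = distance_from_translational_of(0.5, 90.0, omega)
print(f"OF = {omega:.3f} rad/s -> obstacle at about {distance:.2f} m")
```

With these inputs the script reports an OF of about 0.5 rad/s and an obstacle roughly 1 m away. The conversion only holds during translation, which is consistent with the Robot Fly suspending vision during its rotations.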

Figure 3: (a) OCTAVE is a 100-gram Micro-helicopter (MH) equipped with an OF regulator. A single EMD sensor pointing vertically downward was able to detect relative angular speeds occurring within the range of 40°/s to 400°/s, whatever the contrast m (down to m = 0.04). The tethered MH could be remotely pitched forward by a small angle while keeping its roll attitude. It was able to take off and circle at speeds of up to 3 m/s and heights of up to 3m over a large ring-shaped track (outside diameter: 4.5m) covered with stripes showing a random width and a random contrast. (b) Flight variables monitored during a 70-meter flight over the textured pattern, including take-off, level flight and landing. (bA) Flight path consisting of about six laps on the circular track. (bB) Monitored groundspeed. (bC) Monitored OF, which can be seen to have remained virtually constant (~ 3 rad/s) throughout the flight path. (a, photograph, courtesy of M. Vrignaud, b from [18]).

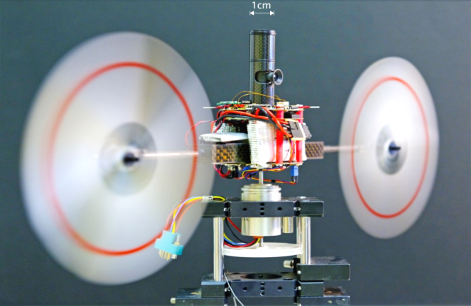

Robot OCTAVE endowed with an “optic flow regulator”

The 0.1-kg aerial robot OCTAVE is a micro-helicopter able to take off, fly level, follow a sloping terrain, and land automatically [16], relying exclusively on a downward-oriented OF sensor (i.e., a “ventral EMD”). The key to this aircraft’s visuo-motor guidance is a specific feedback control system, called the optic flow regulator, which strives to maintain the OF at a constant value: the OF set point. The OF-based autopilot provides evidence that OF measurements are sufficient to make an agent fly safely, without the need to measure state variables such as groundspeed or ground height. The effect of this autopilot is to maintain a safe clearance from the ground by automatically adjusting the ground height at all times in proportion to the groundspeed, whatever the groundspeed. OCTAVE even performs over a platform moving both back and forth and up and down [17]. This autopilot demands little in terms of electronic (or neural) implementation and is compatible with the pinhead size of the insect brain. In fact, OCTAVE accounts for a series of puzzling, seemingly unconnected flying abilities observed by many authors in various insect species over the last 70 years, which suggests that insects may well be equipped with an OF regulator [18].
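A minimal kinematic sketch shows why holding the ventral OF ω = v_x/h at a set point makes the height track the groundspeed. All of the following are assumptions made for illustration: the regulator here commands the climb rate directly in proportion to the OF error, whereas OCTAVE's real regulator acts on rotor lift with its own dynamics [16]; the set point, gain, time step, ground level and mission profile are invented.

```python
OMEGA_SET = 3.0  # rad/s: OF set point (hypothetical value)
GAIN = 0.5       # m/rad: climb rate commanded per rad/s of OF error (hypothetical)
H_MIN = 0.05     # m: height treated as "on the ground" (hypothetical)
DT = 0.01        # s: simulation step

def groundspeed(t):
    """Hypothetical mission profile (m/s): accelerate, cruise, decelerate, stop."""
    if t < 5.0:
        return 0.3 * t
    if t < 15.0:
        return 1.5
    if t < 20.0:
        return 1.5 - 0.3 * (t - 15.0)
    return 0.0

def simulate(duration=22.0):
    """Kinematic OF regulator: the commanded climb rate is proportional to OF error."""
    h = H_MIN
    log = []
    for k in range(round(duration / DT)):
        t = k * DT
        vx = groundspeed(t)
        omega = vx / h                   # ventral OF seen by the downward-looking EMD
        vz = GAIN * (omega - OMEGA_SET)  # OF too high -> climb; too low -> descend
        h = max(h + vz * DT, H_MIN)      # the ground stops any further descent
        log.append((t, vx, omega, h))
    return log

log = simulate()
t, vx, omega, h = log[1490]              # mid-cruise sample, t = 14.9 s
print(f"t = {t:.1f} s, vx = {vx:.2f} m/s, OF = {omega:.3f} rad/s, h = {h:.3f} m")
print(f"final height: {log[-1][3]:.2f} m")
```

In cruise the loop settles where v_x/h equals the set point, i.e. h = v_x/ω_set (0.5 m for 1.5 m/s and 3 rad/s), without ever measuring h or v_x separately. Take-off and landing emerge from the same rule: the craft leaves the ground once the OF at ground level exceeds the set point as it speeds up, and it descends back to the ground as the groundspeed, and hence the OF, falls.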

Robot OSCAR II endowed with a retinal microscanner and a vestibulo-ocular reflex

OSCAR II (Fig. 4) is a twin-engine seeing robot whose eye possesses a microscanning retina inspired by a process we observed in flying flies [19]. We established that a retinal microscanner combined with an EMD leads to a highly accurate Position Sensing Device (PSD). This visual PSD can, for instance, locate an edge with a resolution 70-fold finer than the interreceptor angle, and is therefore endowed with hyperacuity [20]. Flies may therefore, in some cases, be able to detect and locate targets with much greater accuracy than the coarse sampling of their compound eye would suggest. OSCAR II features both visual and inertial feedback control. As in flies (and vertebrate animals), the eye is mechanically decoupled from the body. Two oculomotor reflexes, the visual fixation reflex (VFR) and the vestibulo-ocular reflex (VOR), stabilize the robot’s gaze on the target (a grey edge or a bar) against disturbances affecting the body. The heading control system strives to realign the robot’s heading with the gaze and, hence, with the visual target. OSCAR II was shown to keep its gaze and its heading steadily locked on a drifting target, despite violent perturbations (erratic gusts of wind of up to 5 m/s) applied to one of its propellers. The lightness and low power consumption of this accurate visuo-inertial control system would make it suitable for micro-air vehicles, which are prone to many aerodynamic disturbances. Biological creatures teach us that it is best to compensate for such disturbances early on.
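The benefit of compensating early can be illustrated with a toy yaw-axis model of the three loops described above. Everything in it is an assumption: angles obey first-order kinematics, the rate gyro is ideal, the gains and the gust are invented, and the actual OSCAR II controllers [21] are considerably more elaborate. The eye angle relative to the body is driven by the visual error (VFR) and, optionally, by the negated gyro signal (VOR); the body heading is steered so as to cancel the eye-in-body angle, i.e. to realign with the gaze.

```python
DT = 0.001     # s: simulation step
K_VFR = 20.0   # 1/s: visual fixation reflex gain (hypothetical)
K_HEAD = 5.0   # 1/s: heading-to-gaze realignment gain (hypothetical)

def gust(t):
    """Hypothetical yaw-rate disturbance (rad/s) lasting 0.2 s, e.g. a gust on one propeller."""
    return 2.0 if 1.0 <= t < 1.2 else 0.0

def simulate(use_vor, duration=3.0, target=0.0):
    """Return (worst gaze error, final heading), both in rad, for a fixed target."""
    heading, eye = 0.0, 0.0  # body yaw angle and eye-in-body angle
    worst = 0.0
    for k in range(round(duration / DT)):
        gaze = heading + eye
        body_rate = K_HEAD * eye + gust(k * DT)  # heading steered toward gaze, plus gust
        gyro = body_rate                         # ideal rate gyro (assumption)
        eye_rate = K_VFR * (target - gaze)       # VFR: visual error drives the eye
        if use_vor:
            eye_rate -= gyro                     # VOR: counter-rotate eye against body
        heading += body_rate * DT
        eye += eye_rate * DT
        worst = max(worst, abs(target - (heading + eye)))
    return worst, heading

err_vor, heading_vor = simulate(use_vor=True)
err_no_vor, heading_no_vor = simulate(use_vor=False)
print(f"worst gaze error: with VOR {err_vor:.2e} rad, without VOR {err_no_vor:.3f} rad")
print(f"final heading error with VOR: {abs(heading_vor):.1e} rad")
```

With an ideal VOR, the gust is cancelled at the eye before any visual error appears, so the gaze never leaves the target and the heading recovers afterwards; with vision alone, the gaze slips by several hundredths of a radian before the VFR catches up. A real gyro's noise and bias would limit this perfect cancellation.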

Figure 4: OSCAR II is a tethered aerial robot that orients its heading about the vertical (yaw) axis by driving its two propellers differentially, based on what it sees. The microscanning eye of OSCAR II is mechanically decoupled from the body. The central eye-tube bearing the lens and the two-pixel microscanning retina (f = 10 Hz) was inserted into a larger carbon tube (diameter 10 mm) mounted firmly on the robot’s body. The eye tube was able to rotate about the yaw axis at high speed by means of a micro-voice-coil motor. The robot was mounted here on a low-friction, low-inertia resolver with which its heading could be monitored accurately (photo courtesy of F. Vrignaud) (From [21]).

Conclusion

Insects achieve a great deal with very few resources: few pixels in the eye and few neurons in the brain. Despite the technical challenges of recording the electrical activity of minute neurons in highly complex neuropils, and the need to restrict stimulation to particular neural circuits to analyze their workings, the insect brain can teach us some lessons in sensory-motor control. Some of the principles discovered in the small insect brain can be scaled up and applied to artificial agents, as exemplified here with optic flow sensors and visuo-inertial autopilots. Richard Feynman’s statement, “What I cannot create, I do not understand”, is a strong impetus to create what we claim to understand, and building is the best way to raise new questions by bringing a principle face to face with the real physical world.

Acknowledgment

This work was supported in part by the C.N.R.S., Aix-Marseille University, the French National Research Agency (ANR), the French Government Defense Agency (DGA), and the European Union under grants Codest, Esprit, TMR and IST-FET. The author is indebted to A. Riehle, J.M. Pichon, C. Blanes, M. Boyron, S. Viollet, F. Ruffier, and L. Kerhuel for their excellent collaboration.

For Further Reading

1. N. Franceschini, “Small brains, smart machines: from fly vision to robot vision and back again”, Proc. IEEE, vol. 102, no. 5, pp. 751-781, May 2014.

2. A. Borst and T. Euler, “Seeing things in motion: models, circuits and mechanisms”, Neuron, vol. 71, pp. 974-994, 2011.

3. K. Hausen, “The lobula complex of the fly: structure, function and significance in visual behaviour”, in: Photoreception and Vision in Invertebrates, M. Ali, Ed., New York: Plenum, pp. 523-559, 1984.

4. K. Hausen and M. Egelhaaf, “Neural mechanisms of visual course control in insects”, in: Facets of Vision, D. G. Stavenga and R. C. Hardie, Eds., Berlin: Springer, pp. 391-424, 1989.

5. H. G. Krapp, “Neuronal matched filters for optic flow processing in flying insects”, Int. Rev. Neurobiol., vol. 44, pp. 93-120, 2000.

6. A. Riehle and N. Franceschini, “Motion detection in flies: parametric control over ON-OFF pathways”, Exp. Brain Res., vol. 54, no. 2, pp. 390-394, 1984.

7. N. Franceschini, A. Riehle and A. Le Nestour, “Directionally selective motion detection by insect neurons”, in: Facets of Vision, D.G. Stavenga and R.C. Hardie, Eds. Berlin: Springer, pp. 360-390, 1989.

8. M. Joesch, B. Schnell, S.V. Raghu, D.F. Reiff and A. Borst, “ON and OFF pathways in Drosophila motion vision”, Nature, vol. 468, pp. 300-304, 2010.

9. B. Bausenwein, A.P.M. Dittrich and K.P. Fischbach, “The optic lobe of Drosophila melanogaster II. Sorting of retinotopic pathways in the medulla”, Cell Tissue Res., vol. 267, pp. 17-28, 1992.

10. K. Shinomiya, T. Karuppudurai, T.Y. Lin, Z. Lu, C.H. Lee and I.A. Meinertzhagen, “Candidate neural substrates for off-edge motion detection in Drosophila”, Curr. Biol., vol. 24, Apr. 2014.

11. I.A. Meinertzhagen, “The anatomical organization of the compound eye’s visual system”, in: Behavioral Genetics of the Fly (Drosophila melanogaster), J. Dubnau, Ed., Cambridge Univ. Press, 2014.

12. M. Meier, E. Serbe, M.S. Maisak, J. Haag, B.J. Dickson, and A. Borst, “Neural circuit components of the Drosophila OFF motion vision pathway”, Curr. Biol., vol. 24, pp. 385-392, 2014.

13. R. Behnia, D.A. Clark, A.G. Carter, T.R. Clandinin and C. Desplan, “Processing properties of ON and OFF pathways for Drosophila motion detection”, Nature, June 2014, in press.

14. M.S. Maisak et al., “A directional tuning map of Drosophila elementary motion detectors”, Nature vol. 500, pp. 212-216, Aug. 2013.

15. N. Franceschini, J. M. Pichon, and C. Blanes, “From insect vision to robot vision”, Phil. Trans. R. Soc. Lond. B, vol. 337, no. 1281, pp. 283-294, 1992.

16. F. Ruffier and N. Franceschini, “Optic flow regulation: the key to aircraft automatic guidance”, Robot. Autonom. Syst., vol. 50, no. 4, pp. 177-194, 2005.

17. F. Ruffier and N. Franceschini, “Optic flow regulation in unsteady environments: a tethered MAV achieves terrain following and targeted landing over a moving platform”, J. Intell. Rob. Syst., 2014, in press.

18. N. Franceschini, F. Ruffier, and J. Serres, “A bio-inspired flying robot sheds light on insect piloting abilities”, Curr. Biol., vol. 17, no. 4, pp. 329-335, 2007.

19. N. Franceschini and R. Chagneux, “Repetitive scanning in the fly compound eye”, in Göttingen Neurobiol. Rep., Stuttgart, Germany: G. Thieme, 1997, p. 279.

20. S. Viollet and N. Franceschini, “A hyperacute optical position sensor based on biomimetic retinal microscanning”, Sensors and Actuators A, vol. 160, pp. 60-68, 2010.

21. L. Kerhuel, S. Viollet and N. Franceschini, “Steering by gazing: an efficient biomimetic control strategy for visually guided micro aerial vehicles”, IEEE Trans. Rob., vol. 26, no. 2, pp. 307-319, Apr. 2010.

Contributor

Nicolas Franceschini received the Doctorat d’Etat degree in physics from the University of Grenoble and National Polytechnic Institute, Grenoble, France. He was appointed as a Research Director at the C.N.R.S. and set up the Neurocybernetics Lab, and later the Biorobotics Lab in Marseille, France. His research interests include neural information processing, vision, eye movements, microoptics, neuromorphic circuits, sensory-motor control systems, biologically-inspired robots and autopilots.
