Building Brain Machine Interfaces – Neuroprosthetic Control with Electrocorticographic Signals

By Nitish Thakor

A prosthesis revolution is underway. New limb prostheses deploy very advanced materials and robotic technology. For instance, dexterous upper limbs designed by the Johns Hopkins University Applied Physics Laboratory and a commercial company, DEKA, display 22 degrees of freedom. Along with this comes a revolution in how the limbs are controlled: implantable neural interface technologies and methods will allow the wearer to move the limb more naturally.

A prosthesis revolution is underway.

Upper limb and lower limb prostheses alike now deploy very advanced materials and robotic technology. For example, revolutionary dexterous upper limbs have been designed by the Johns Hopkins University Applied Physics Laboratory (JHU/APL) and the DEKA company. The JHU/APL Modular Prosthetic Limb (MPL) is being further developed for potential commercialization by HDT International. The MPL is a remarkable technology, displaying 17 degrees of freedom and 26 articulating joints in an anthropomorphic limb (Figure 1).

Figure 1: A graphic of the recent generation of Johns Hopkins University/Applied Physics Lab prototype Modular Prosthetic Limb.

As advanced as these limbs are, their control strategies are still quite rudimentary.

A paralyzed individual who has lost an upper limb would presently use a mechanically powered or myoelectrically controlled prosthetic limb. A control strategy based on body movement or muscle signals is quite unnatural and provides only very limited degrees of freedom for controlling these advanced prosthetic limbs. Hence the interest in a futuristic brain machine interface, in which the prosthetic limb would be directly actuated and controlled by brain signals.

That future is here. To put it simply, the solution lies in our brains: in brain waves, that is.

Brain waves carry important and highly relevant information pertaining to the control of the prosthetic limb. The challenge has been to record the brain waves, be they from neurons directly or from the whole brain, that is, from the scalp. The related challenge has been to decipher or decode the brain waves. It would be instructive to understand what these signal sources are and how they might be recorded, before we figure out how we might decode them for building a brain machine interface.

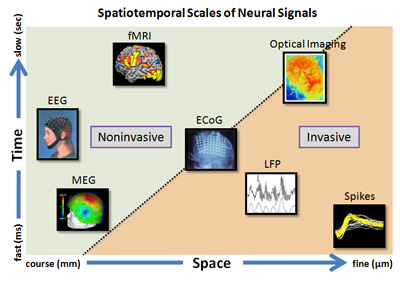

Figure 2: Rendering of different signals from the brain potentially usable for brain machine interface. The graphic illustrates the temporal and spatial scales of each signal. For example EEG and MEG are noninvasive, clinically relevant signals but have coarse spatial resolution. fMRI and optical imaging are primarily imaging modalities. The ECoG, LFP and neural spike activities are recorded essentially using invasive means but offer the best temporal and spatial resolutions. (Courtesy: Dr. V. Aggarwal).

As illustrated in Figure 2, at the most microscopic or reductionist level, the signals are produced by neurons, namely the action potentials (also commonly termed ‘spikes’). Microelectrodes inserted into the brain, in the vicinity of neurons, pick up the spikes. The filtered versions of the neural activity are the local field potentials. These very fine signals carry the message of individual neurons or populations of neurons that code for movement of the limb.

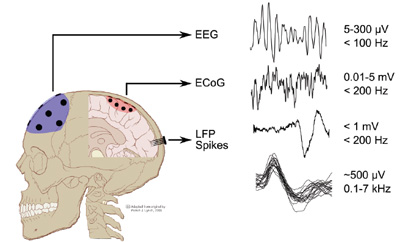

At a more macroscopic level, we have two options: to record the electrocorticographic (ECoG) signal or the electroencephalogram (EEG) (Figure 3). The ECoG signal is recorded by placing an array of electrodes directly on the brain surface (of course, this requires brain surgery!), while the EEG signals are recorded by placing an array of electrodes on the scalp (noninvasive, and hence convenient, but alas, the signals are small and can be noisy). Once these microscopic or macroscopic signals are acquired, they need to be digitally filtered and processed to derive useful information. Therein lies the major challenge for neuroengineers: to decode the neural signals for control of more than one degree of freedom of the prosthetic limb.

Figure 3: Different signals from the brain, from macroscopic, namely EEG and ECoG, to microscopic signals, namely local field potentials, LFPs, and action potentials or spikes. (Courtesy Dr. M. Mollazadeh).

So how does the basic brain machine interface work?

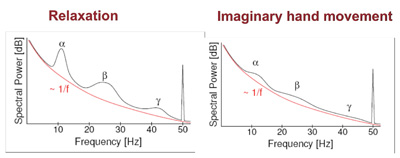

Considerable research has gone into developing a wide array of algorithms for analysis of EEG signals as well as ECoG signals. The central idea generally tends to be to carry out some level of spectral analysis to capture modulation of the brain rhythm associated with actual or imagined movements (Figure 4).

Figure 4: The basic idea behind the analysis of noninvasive signals for building a brain machine interface. Imagined movement results in a modulation of the cortical rhythm in different frequency bands.
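The spectral analysis idea can be illustrated with a minimal sketch. It assumes a synthetic single-channel signal, a 256 Hz sampling rate, and a 10 Hz rhythm whose amplitude drops during imagined movement; none of these values come from the studies discussed here, and the function name `band_power` is hypothetical. It estimates the power in one frequency band from a windowed FFT and compares rest against imagery.

```python
import numpy as np

def band_power(signal, fs, f_lo, f_hi):
    """Mean periodogram power of `signal` in the band [f_lo, f_hi) Hz."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()            # remove the DC offset
    window = np.hanning(signal.size)           # taper to limit spectral leakage
    spectrum = np.fft.rfft(signal * window)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs < f_hi)
    return float((np.abs(spectrum[mask]) ** 2).mean())

# Synthetic data (assumption): the 10 Hz rhythm is weaker during imagery.
fs = 256.0
t = np.arange(0.0, 2.0, 1.0 / fs)
rng = np.random.default_rng(0)
noise = 0.2 * rng.standard_normal(t.size)
rest = 1.0 * np.sin(2.0 * np.pi * 10.0 * t) + noise
imagery = 0.3 * np.sin(2.0 * np.pi * 10.0 * t) + noise

power_rest = band_power(rest, fs, 8.0, 13.0)
power_imagery = band_power(imagery, fs, 8.0, 13.0)
relative_change = (power_imagery - power_rest) / power_rest
print(f"relative band-power change during imagery: {relative_change:.2f}")
```

A negative relative change indicates that the rhythm was suppressed. A real system would compute this quantity per electrode and per band, over short sliding windows.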

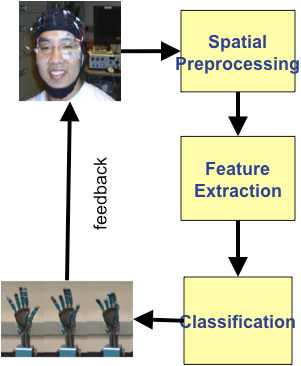

A great deal of signal preprocessing is needed. For example, beyond acquisition of the EEG (or ECoG) signals lies the step of selecting the best electrodes, located over the somatosensory cortical regions, that convey the modulation of cortical rhythms. This may be accomplished through various spatial processing schemes that localize the signal source. The next step is to extract features that code for the desired hand movements, such as opening or closing of the hand, or movement of individual fingers. The final step is to classify different movements, such as reach or different types of grasp. The whole design should work in a “closed loop”: modulation of brain rhythm is used to derive signals that control the prosthetic or robotic arm, and the loop is closed through visual perception, which in turn modulates brain rhythms (Figure 5). Importantly, all computation and execution must occur in real time.

Figure 5: Building a Brain Machine Interface system through the steps of EEG/ECoG preprocessing, spatial localization of best electrodes, extracting and classifying features of movement control and finally closing the loop through visual feedback.
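The offline part of this pipeline (electrode selection, feature extraction, classification) can be sketched as follows. This is an illustration under stated assumptions, not the method used in any of the studies mentioned: the data are synthetic, the two classes stand in for "open" and "close", each class is assumed to suppress a 10 Hz rhythm on a different channel, and a nearest-centroid rule stands in for whatever classifier a real system would use. All function names are hypothetical.

```python
import numpy as np

FS = 256.0  # assumed sampling rate in Hz

def log_band_power(trial, f_lo=8.0, f_hi=13.0, fs=FS):
    """Feature extraction: log band power per channel; trial is (channels, samples)."""
    trial = trial - trial.mean(axis=1, keepdims=True)
    spectrum = np.fft.rfft(trial, axis=1)
    freqs = np.fft.rfftfreq(trial.shape[1], d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs < f_hi)
    return np.log((np.abs(spectrum[:, mask]) ** 2).mean(axis=1))

def select_channels(features, labels, k):
    """Electrode selection: keep the k channels whose class means differ most."""
    gap = np.abs(features[labels == 0].mean(axis=0) - features[labels == 1].mean(axis=0))
    score = gap / (features.std(axis=0) + 1e-12)
    return np.sort(np.argsort(score)[-k:])

def fit_centroids(features, labels):
    """Classifier training: one mean feature vector per class."""
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def predict(features, classes, centroids):
    """Assign each trial to the class with the nearest centroid."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=2)
    return classes[dists.argmin(axis=1)]

def make_trial(rng, label, n_channels=4, n_samples=256):
    """Synthetic trial (assumption): class `label` weakens the rhythm on channel `label`."""
    t = np.arange(n_samples) / FS
    amp = np.ones(n_channels)
    amp[label] = 0.3
    phase = rng.uniform(0.0, 2.0 * np.pi, size=(n_channels, 1))
    rhythm = amp[:, None] * np.sin(2.0 * np.pi * 10.0 * t + phase)
    return rhythm + 0.3 * rng.standard_normal((n_channels, n_samples))

rng = np.random.default_rng(1)
labels = np.tile([0, 1], 30)  # 60 trials, alternating classes
features = np.stack([log_band_power(make_trial(rng, int(y))) for y in labels])

train, test = slice(0, 40), slice(40, 60)
selected = select_channels(features[train], labels[train], k=2)
classes, centroids = fit_centroids(features[train][:, selected], labels[train])
predicted = predict(features[test][:, selected], classes, centroids)
accuracy = float((predicted == labels[test]).mean())
print("selected channels:", selected.tolist(), "test accuracy:", accuracy)
```

Channel selection and training use only the first 40 trials, so the accuracy on the remaining 20 is an honest held-out estimate. The sketch omits the closed-loop and real-time aspects, which depend on the acquisition hardware and the prosthesis.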

The Electrocorticogram (ECoG) signal

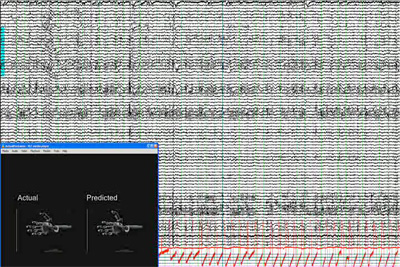

The ECoG signals, recorded directly from the brain surface (subdurally), have the essential characteristics of an EEG. As Figure 6 illustrates, a large number of ECoG signals are simultaneously recorded and “decoded”. That is, to build the brain machine interface (BMI) for control of a neural prosthesis, significant signal processing is required to interpret relevant changes in the ECoG signals and then derive control or command signals for the neuroprosthesis (see the inset showing actual and decoded hand and finger movements).

In previously published work from our group at Johns Hopkins University, low frequency “local motor potential” signals from human subjects, recorded during slow grasping motions of the hand, were decoded using simple linear models. A relatively small number of electrodes was sufficient to decode and track the movement of individual fingers, pointing the way to neural control of dexterous prosthetic fingers. On the other hand, other features of the ECoG signals in the high gamma band (70-100 Hz and 100-150 Hz) provide the best performance for decoding grasp aperture.

Figure 6: Simultaneous recording of 64 channels of ECoG signals with the inset showing a simulated prosthetic limb with actual and predicted finger movement of the hand (courtesy Drs. N. Crone and S. Acharya).
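To make the idea of a simple linear decoder concrete, the sketch below fits a least-squares model that maps smoothed, low frequency signals from several channels to a slowly varying finger position. It is an illustration only: the data are synthetic, the channel gains, noise level, 100 Hz sampling rate and 0.5 s smoothing window are assumptions, and the function names are hypothetical rather than taken from the published work.

```python
import numpy as np

def moving_average(x, width):
    """Low-pass each column of x (samples, channels) with a boxcar of `width` samples."""
    kernel = np.ones(width) / width
    return np.column_stack([np.convolve(col, kernel, mode="same") for col in x.T])

def add_bias(features):
    """Append a constant column so the model can learn an offset."""
    return np.column_stack([features, np.ones(len(features))])

def fit_linear_decoder(features, target):
    """Ordinary least squares: find weights minimizing ||add_bias(features) @ w - target||."""
    weights, *_ = np.linalg.lstsq(add_bias(features), target, rcond=None)
    return weights

def decode(features, weights):
    return add_bias(features) @ weights

# Synthetic recording (assumption): each channel is a scaled copy of the
# finger position plus white noise; one channel carries no information.
fs = 100.0
t = np.arange(0.0, 20.0, 1.0 / fs)
position = 0.5 + 0.5 * np.sin(2.0 * np.pi * 0.25 * t)   # slow open/close cycle
gains = np.array([1.0, -0.8, 0.5, 0.0, 0.3, -0.2])
rng = np.random.default_rng(2)
ecog = np.outer(position, gains) + rng.standard_normal((t.size, gains.size))

features = moving_average(ecog, width=50)               # keep the slow component
half = t.size // 2
weights = fit_linear_decoder(features[:half], position[:half])
predicted = decode(features[half:], weights)
r = float(np.corrcoef(predicted, position[half:])[0, 1])
print(f"correlation between decoded and actual position: {r:.2f}")
```

The decoder is fit on the first half of the recording and evaluated on the second half, so the reported correlation reflects tracking of movement the model has not seen. For the high gamma band features, the same model could be fit to band power time courses instead of smoothed voltages.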

What goes on inside the brain when a subject initiates the limb movement?

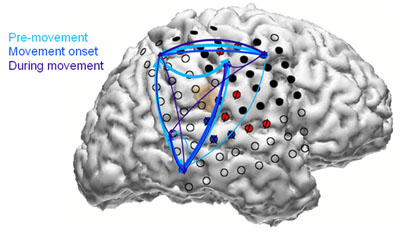

Limb movement is the result of very complex orchestration and processing in the brain, from planning or pre-movement to movement onset to actual execution (as illustrated in Figure 7). This is of course a coarse, top-down view that one would get simply by putting the ECoG electrodes on the brain surface. Our ability to interpret the full orchestra of the brain’s function in carrying out any dexterous movement is quite limited right now. Most of the studies are being done on patients scheduled for therapy or surgery for treatment of epilepsy, and in view of this condition and the clinical need, this patient population is not the best suited. Amputees generally may not readily agree to brain surgery for cortical control of a prosthesis. Recent work from the University of Pittsburgh involved one paralyzed subject, pointing to a potentially ethically justified and willing subject population. Nevertheless, considerable work will be needed to prove safety and efficacy before more widespread clinical trials can occur.

Figure 7: A graphic showing different electrodes active at different times during the three phases of movement of a limb. The circles are electrodes and the colored arrows show flow of signal generation (courtesy Heather Benz).

This is an exciting time for the field, with several groups involved in technology demonstration and feasibility testing of ECoG signals for neuroprosthesis control. These include our group at the Johns Hopkins University and others at the University of Pittsburgh; Washington University, St. Louis; the University of Washington, Seattle; and the Wadsworth Center in New York. After the pioneering investment by the Defense Advanced Research Projects Agency, the National Institutes of Health has stepped in to support neural prosthesis research, and hence further demonstrations of this brain machine interface technology should be forthcoming.

For Further Reading

A.B. Schwartz, X.T. Cui, D.J. Weber, and D.W. Moran, “Brain controlled interfaces: movement restoration with neural prosthetics,” Neuron, vol. 52, (no. 1), pp. 205-20, Oct 5, 2006.

L.R. Hochberg, M.D. Serruya, G.M. Friehs, J.A. Mukand, M. Saleh, A.H. Caplan, A. Branner, D. Chen, R.D. Penn, and J.P. Donoghue, “Neuronal ensemble control of prosthetic devices by a human with tetraplegia,” Nature, vol. 442, (no. 7099), pp. 164-71, Jul 13 2006.

E.C. Leuthardt, G. Schalk, J.R. Wolpaw, J.G. Ojemann, and D.W. Moran, “A brain-computer interface using electrocorticographic signals in humans,” J Neural Eng., vol. 1, (no. 2), pp. 63-71, Jun 2004.

K.J. Miller, G. Schalk, E.E. Fetz, M. den Nijs, J.G. Ojemann, and R.P. Rao, “Cortical activity during motor execution, motor imagery, and imagery-based online feedback,” Proc Natl Acad Sci U S A, vol. 107, (no. 9), pp. 4430-5, Mar 2 2010.

M. Fifer, S. Acharya, H. Benz, M. Mollazadeh, N. Crone, and N. V. Thakor, “Towards Electrocorticographic control of dexterous upper limb prosthesis,” IEEE Pulse, 2012.

J. Carmena, “Becoming bionic: the new brain-machine interfaces that exploit plasticity of the brain may allow people to control prosthetic devices in a natural way,” IEEE Spectrum, pp. 24-29, March, 2012.

University of Pittsburgh video

Press release with picture/summary

Nitish V. Thakor is a Professor of Biomedical Engineering at Johns Hopkins University, Baltimore, USA, as well as the Director of the newly formed institute for neurotechnology, SiNAPSE, at the National University of Singapore.