Wearable Computing

By Daniel Roggen, Stéphane Magnenat, Markus Waibel, and Gerhard Tröster

NOTE: This is an abstract of the entire article, which appeared in the June 2011 issue of the IEEE Robotics & Automation magazine.


In robotics, activity-recognition systems can be used to label large robot-generated activity data sets and to enable activity-aware human-robot interactions (HRIs). Activity recognition also opens the way to self-learning autonomous robots. The recognition of human activities from body-worn sensors is a key paradigm in wearable computing. In this field, the variability in human activities, sensor deployment characteristics, and application domains has led to the development of best practices and methods to enhance the robustness of activity-recognition systems. The article reviews the activity-recognition principles followed in the wearable computing community and the methods recently proposed to improve their robustness. These approaches aim at the seamless sharing of activity-recognition systems across platforms and application domains.

Activity recognition supports natural HRI. Activities can be inferred from sensors worn by the user and broadcast to the surrounding robots. Typical applications are in the domain of assistive robotics. Activity recognition for assistive uses is the typical aim of wearable computing.

Activity recognition in wearable computing is challenging because of a high variability along multiple dimensions: human action-motor strategies are highly variable, the deployment of sensors at calibrated locations is challenging, and the environments where systems are deployed are usually open ended.

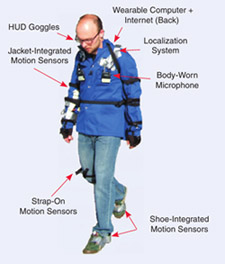

Figure 1. A typical wearable-computing system. The system comprises a see-through head-up display (HUD) in the goggles to provide the user with contextual information, an Internet connection, an on-body computer, and sensors to infer the user's context, such as his activities and location.

Wearable computing, as originally presented in 1996, emphasized a shift in computing paradigm. Computers would no longer be machines separate from the persons using them. Instead, they would become an unobtrusive extension of our bodies, providing us with additional sensing, feedback, and computational capabilities. As implied by its name, wearable computing never considered implanting sensors or chips into the body. Rather, it emphasizes the view that clothing, which has become an extension of our natural skin, would be the substrate that technology could disappear into (Figure 1). The prevalence of mobile phones now offers an additional vector for on-body sensing and computing.

The article includes referenced lists of applications for wearable computing and examples of activities that can be recognized.

Wearable Computing

Activity and gesture recognition are generally tackled as a problem of learning by demonstration. The user is instrumented with the selected sensors and is put into a situation where he performs the activities and gestures of interest.
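Learning by demonstration, as described above, amounts to supervised learning: the recorded and annotated demonstrations become training examples for a classifier. The following is a minimal Python sketch, assuming that a feature vector has already been computed for each demonstration. It uses a nearest-centroid classifier purely as a stand-in; the article does not prescribe this classifier, and the function names, labels, and values are hypothetical.

```python
import math

# Hypothetical annotated demonstrations: each entry pairs a feature vector
# (e.g., mean and variance of one accelerometer axis) with the label of the
# activity the user was asked to perform. Values are illustrative only.
Demo = list[tuple[list[float], str]]


def train_nearest_centroid(demos: Demo) -> dict[str, list[float]]:
    """Average the feature vectors of each class into one centroid per class."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for features, label in demos:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(model: dict[str, list[float]], features: list[float]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], features))


demos = [
    ([0.1, 0.01], "sit"), ([0.2, 0.02], "sit"),
    ([1.0, 0.5], "walk"), ([1.2, 0.7], "walk"),
]
model = train_nearest_centroid(demos)
print(classify(model, [1.05, 0.55]))
```

A nearest-centroid model is used here only because it is short and transparent; any supervised classifier could fill the same role once the demonstrations are labeled.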

Sensors are used to acquire signals related to the user's activities or gestures. User comfort is paramount: the sensors must be small, unobtrusive, and ideally invisible to the outside. Common sensor modalities are body-worn accelerometers and inertial measurement units (IMUs). Accelerometers are extremely small and consume little power. IMUs contain accelerometers, magnetometers, and gyroscopes, which allow the orientation of the device to be sensed with respect to a reference. The article describes the sensors that are being incorporated into wearable computing.
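How an IMU combines its sensors to estimate orientation can be illustrated with a complementary filter. This is one common way of fusing a gyroscope with an accelerometer to track tilt and is only assumed here, not taken from the article. The integrated gyroscope rate is accurate over short intervals but drifts, while the gravity direction measured by the accelerometer does not drift but is noisy. The Python sketch below estimates a single pitch angle. The sample format, the axis and sign convention, and the weight `alpha` are assumptions, and magnetometer-based heading is omitted.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (rad) implied by gravity when the sensor is roughly static."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> list[float]:
    """Fuse gyroscope rate and accelerometer tilt into a pitch estimate.

    samples: iterable of (gyro_rate_rad_s, ax, ay, az) tuples, an assumed format
    where the gyro rate is about the pitch axis with a consistent sign convention.
    alpha weights the integrated gyroscope; (1 - alpha) weights the accelerometer.
    """
    estimates = []
    pitch = None
    for gyro_rate, ax, ay, az in samples:
        measured = accel_pitch(ax, ay, az)
        if pitch is None:
            pitch = measured  # initialise from the accelerometer alone
        else:
            # Short-term: integrate the gyro. Long-term: pull towards gravity tilt.
            pitch = alpha * (pitch + gyro_rate * dt) + (1 - alpha) * measured
        estimates.append(pitch)
    return estimates
```

With a constant gyroscope bias, pure integration would drift without bound. The accelerometer term keeps the estimate bounded, which is the reason for fusing the two sensors.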

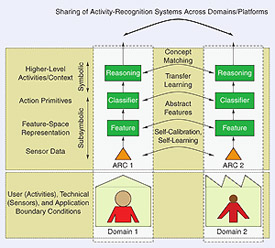

The activity-recognition chain (ARC) refers to a set of processing principles commonly followed by most researchers to infer human activities from raw sensor data. Subsymbolic processing maps the low-level sensor data (e.g., body-limb acceleration) to semantically meaningful action primitives (e.g., grasp). Symbolic processing then maps sequences of action primitives (e.g., grasping and cutting) to higher-level activities (e.g., cooking).
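The two stages of the ARC can be sketched end to end in Python. This is a toy illustration under stated assumptions, not the authors' implementation. Segmentation uses fixed-size sliding windows. The subsymbolic stage uses hand-set thresholds on a single feature in place of a trained classifier. The symbolic stage matches primitive sequences against a small rule table. All names, thresholds, and rules are hypothetical.

```python
from statistics import mean, pstdev
from typing import Optional

# Hypothetical mapping from primitive sequences to higher-level activities.
ACTIVITY_RULES = {
    ("grasp", "cut"): "cooking",
    ("grasp", "drink"): "drinking",
}


def sliding_windows(signal: list[float], size: int, step: int) -> list[list[float]]:
    """Segment a 1-D sensor stream into fixed-size, possibly overlapping windows."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def features(window: list[float]) -> tuple[float, float]:
    """Per-window features: mean and population standard deviation."""
    return mean(window), pstdev(window)


def subsymbolic(feat: tuple[float, float]) -> str:
    """Map features to an action primitive.

    The thresholds are placeholders standing in for a trained classifier.
    """
    _, spread = feat
    if spread < 0.05:
        return "idle"
    return "grasp" if spread < 0.5 else "cut"


def symbolic(primitives: list[str]) -> Optional[str]:
    """Drop idle windows, merge repeats, then look for a known primitive sequence."""
    seq: list[str] = []
    for p in primitives:
        if p != "idle" and (not seq or seq[-1] != p):
            seq.append(p)
    for pattern, activity in ACTIVITY_RULES.items():
        n = len(pattern)
        if any(tuple(seq[i:i + n]) == pattern for i in range(len(seq) - n + 1)):
            return activity
    return None


def activity_recognition_chain(signal: list[float], size: int = 4, step: int = 2) -> Optional[str]:
    """Raw signal -> windows -> features -> primitives -> activity."""
    primitives = [subsymbolic(features(w)) for w in sliding_windows(signal, size, step)]
    return symbolic(primitives)


# Idle, then a small movement (grasp), then a vigorous one (cut).
signal = [0, 0, 0, 0] + [0, 0.4, 0, 0.4] + [0, 2, 0, 2]
print(activity_recognition_chain(signal, size=4, step=4))
```

Keeping the stages separate mirrors the ARC. The subsymbolic stage could be retrained for a new sensor while the symbolic rules stay unchanged.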

Sharing Activity-Recognition Systems

Human activity recognition in wearable computing is challenging because of a large variability in the mapping of sensor signals to activity classes. (The origins of this variability are discussed in the article.)

To share an ARC, there must be a common representation at some stage in the recognition chain.

Figure 2. The levels at which a common representation is assumed in order to share a recognition system between users (platforms) or domains.

There are four levels at which ARCs can be shared:

- Sensor-Level Sharing

- Feature-Level Sharing

- Classifier-Level Sharing

- Symbolic-Level Sharing
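Symbolic-level sharing can be sketched as follows. Platforms with different sensors each keep their own subsymbolic stage, and only the vocabulary of action primitives is fixed. That vocabulary is the common representation. The Python sketch below illustrates this idea under that assumption and is not the article's method. The primitive vocabulary, the two platforms (a wrist IMU and a sensor glove), their thresholds, and the single rule are all hypothetical.

```python
from typing import Callable, Optional

# Hypothetical shared vocabulary: the common representation at the symbolic level.
PRIMITIVES = ("idle", "grasp", "cut")


def shared_symbolic_stage(primitives: list[str]) -> Optional[str]:
    """Written once and reused by every platform: 'cooking' if a cut follows a grasp."""
    if any(p not in PRIMITIVES for p in primitives):
        raise ValueError("platform emitted a primitive outside the shared vocabulary")
    if "grasp" in primitives and "cut" in primitives[primitives.index("grasp"):]:
        return "cooking"
    return None


def wrist_imu(acc_std: list[float]) -> list[str]:
    """Platform A (hypothetical): per-window acceleration std in m/s^2."""
    return ["idle" if s < 0.5 else "grasp" if s < 5.0 else "cut" for s in acc_std]


def glove(pressure: list[float], vibration: list[float]) -> list[str]:
    """Platform B (hypothetical): grip pressure (0..1) and vibration energy per window."""
    out = []
    for p, v in zip(pressure, vibration):
        out.append("cut" if p > 0.5 and v > 0.3 else "grasp" if p > 0.5 else "idle")
    return out


def recognise(subsymbolic: Callable[..., list[str]], *data) -> Optional[str]:
    """Chain a platform-specific subsymbolic stage with the shared symbolic stage."""
    return shared_symbolic_stage(subsymbolic(*data))


print(recognise(wrist_imu, [0.1, 2.0, 8.0]))
print(recognise(glove, [0.0, 0.9, 0.9], [0.0, 0.0, 0.6]))
```

Both platforms reach the same activity through the same symbolic stage, even though their raw data and subsymbolic stages have nothing in common.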

In addition, the article considers other approaches to ARC and lists the challenges that ARC still has to address.

ACKNOWLEDGMENTS

The authors acknowledge the financial support of EU FP7 under the project OPPORTUNITY with grant number 225938 and RoboEarth with grant number 248942.

AUTHORS

Daniel Roggen (droggen@gmail.com), Wearable Computing Laboratory, Swiss Federal Institute of Technology, Zürich, Switzerland.

Stéphane Magnenat, Autonomous Systems Laboratory, Swiss Federal Institute of Technology, Zürich, Switzerland.

Markus Waibel, Institute for Dynamic Systems and Control, Swiss Federal Institute of Technology, Zürich, Switzerland.

Gerhard Tröster, Wearable Computing Laboratory, Swiss Federal Institute of Technology, Zürich, Switzerland.