Eye, Robot: Embedded vision, the next big thing in digital signal processing

By Brian Dipert and Amit Shoham

NOTE: This is an overview of the entire article, which appeared in the Spring 2012 issue of the IEEE Solid-State Circuits Magazine.


Full-featured, power-stingy, and low-cost processors, image sensors, and other system building blocks are poised to make embedded vision the next digital signal processing success story. Fueled by high-volume consumer electronics applications, expanding to a host of other markets, and brought to life by a worldwide alliance of suppliers and system developers, the technology is sure to fulfill its promise in the near future.

Embedded vision, the next likely digital signal processing success story, refers to machines that understand their environment through visual means. It builds on, for example, the image sensors and SoCs already found in digital cameras and takes processing to the next level: interpreting meaning from, and responding to, the information in the captured frames. The authors use the term embedded vision to refer to any microprocessor-based system that includes an image sensor and isn’t a general-purpose computer. Such a system could be a smartphone, a tablet computer, a surveillance system, an earthbound or flight-capable robot, a vehicle containing a 360-degree suite of cameras, or a medical diagnostic device. Or it could be a wired or wirelessly tethered user interface peripheral.

Embedded vision can alert you to a child struggling in a swimming pool and to an intruder attempting to enter your residence or business. It can warn you of impending hazards on the roadway around you and even prevent you from executing lane-change, acceleration, and other maneuvers that could be hazardous to yourself and others. It can equip a military drone or other robot with electronic “eyes” that enable limited or even fully autonomous operation. It can assist a human physician in diagnosing a patient’s illness. And, in conjunction with GPS, compass, accelerometer, gyro, and other sensors, it can present a data-augmented representation of the scene encompassed by the image sensor’s field of view. These and innumerable other applications provide ripe opportunities for participants in all areas of the embedded vision ecosystem, computer vision veterans and new entrants alike.

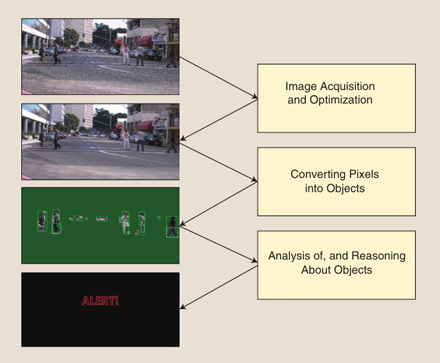

A typical embedded vision processing flow consists of three main functional stages:

- image acquisition and optimization

- converting pixels into objects

- analysis of – and reasoning about – these objects

Each of these stages relies extensively on digital signal processing algorithms.
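To make the three stages concrete, here is a minimal sketch that runs a tiny grayscale frame through all of them. It assumes a frame is a nested list of 8-bit pixel values, and it uses min-max contrast stretching (stage 1), thresholding plus 4-connected component labeling (stage 2), and area/centroid reporting (stage 3). These particular techniques and the function names `optimize`, `find_objects`, and `analyze` are illustrative choices, not the article's method; real systems use far richer algorithms at each stage.

```python
from collections import deque

# Assumed frame representation: rows of 8-bit grayscale pixel values.
Frame = list[list[int]]


def optimize(frame: Frame) -> Frame:
    """Stage 1 (acquisition/optimization): stretch contrast to span 0..255."""
    lo = min(min(row) for row in frame)
    hi = max(max(row) for row in frame)
    span = (hi - lo) or 1  # avoid division by zero on a flat frame
    return [[(p - lo) * 255 // span for p in row] for row in frame]


def find_objects(frame: Frame, threshold: int = 128) -> list[list[tuple[int, int]]]:
    """Stage 2 (pixels to objects): threshold, then group 4-connected pixels into blobs."""
    h, w = len(frame), len(frame[0])
    seen = [[False] * w for _ in range(h)]
    objects = []
    for y in range(h):
        for x in range(w):
            if frame[y][x] >= threshold and not seen[y][x]:
                # Breadth-first flood fill collects one connected blob.
                blob, queue = [], deque([(y, x)])
                seen[y][x] = True
                while queue:
                    cy, cx = queue.popleft()
                    blob.append((cy, cx))
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                                and frame[ny][nx] >= threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                objects.append(blob)
    return objects


def analyze(objects: list[list[tuple[int, int]]], min_area: int = 2) -> list[dict]:
    """Stage 3 (analysis): report area and (x, y) centroid of sufficiently large blobs."""
    results = []
    for blob in objects:
        if len(blob) >= min_area:
            cy = sum(p[0] for p in blob) / len(blob)
            cx = sum(p[1] for p in blob) / len(blob)
            results.append({"area": len(blob), "centroid": (cx, cy)})
    return results
```

For example, `analyze(find_objects(optimize(frame)))` on a small frame containing a bright 2×2 square and a dimmer two-pixel bar returns one record per blob. Keeping each stage as a separate function mirrors the pipeline structure: any stage can be swapped for a better algorithm without touching the others.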

The article describes the individual functions involved in each of these stages. For instance, functions in the last stage (analysis of and reasoning about objects) include:

- object movement tracking

- object classification (e.g., determining if an object is a person, a vehicle, or something else)

- object recognition (e.g., determining whose face has been detected)

- obstacle detection (deciding whether an object lies in our path)

- behavior recognition (e.g., identifying a hand-gesture command)

- behavior classification (e.g., understanding whether a person is walking, sitting, standing, or lying down)
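Object movement tracking, the first function above, can be illustrated with one of the simplest possible approaches: greedy nearest-centroid association between consecutive frames. The sketch below is a hypothetical `CentroidTracker` class, not an algorithm from the article; the `max_dist` gating parameter is an assumption, and production trackers typically add motion prediction (e.g., Kalman filtering) and tolerate objects that briefly disappear.

```python
import math
from itertools import count


class CentroidTracker:
    """Hypothetical minimal tracker: greedily matches each new centroid to the
    nearest existing track within max_dist; unmatched centroids start new tracks."""

    def __init__(self, max_dist: float = 5.0):
        self.max_dist = max_dist
        self.tracks: dict[int, tuple[float, float]] = {}  # track id -> last centroid
        self._ids = count()

    def update(self, centroids: list[tuple[float, float]]) -> dict[int, tuple[float, float]]:
        # All (distance, track id, detection index) pairs, closest first.
        pairs = sorted(
            (math.dist(pos, c), tid, i)
            for tid, pos in self.tracks.items()
            for i, c in enumerate(centroids)
        )
        used_tracks, used_dets, updated = set(), set(), {}
        for d, tid, i in pairs:
            if d > self.max_dist:
                break  # every remaining pair is even farther apart
            if tid in used_tracks or i in used_dets:
                continue
            used_tracks.add(tid)
            used_dets.add(i)
            updated[tid] = centroids[i]
        # Detections left unmatched are treated as newly appeared objects.
        for i, c in enumerate(centroids):
            if i not in used_dets:
                updated[next(self._ids)] = c
        self.tracks = updated  # tracks with no match this frame are dropped
        return updated
```

Feeding it the centroids produced per frame by an object-finding stage yields stable IDs for objects that move less than `max_dist` pixels between frames; the sequence of positions under each ID is the raw material for later behavior recognition.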

(The last function suggests a medical application: home monitoring of elderly people who may be prone to falls.)
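As a rough illustration of how behavior classification could feed such a fall-monitoring application, the sketch below labels a tracked person's posture from the aspect ratio of their bounding box and flags a possible fall when a standing posture is followed by a lying one within a few frames. The aspect-ratio thresholds (1.5 and 0.8), the `max_frames` window, and the function names are all hypothetical assumptions, not values from the article; distinguishing walking from standing would additionally require motion information, such as track velocity.

```python
def classify_posture(width: float, height: float) -> str:
    """Hypothetical heuristic: label a person's bounding box by its height/width ratio."""
    ratio = height / width
    if ratio >= 1.5:  # assumed threshold: tall, narrow box
        return "standing"
    if ratio >= 0.8:  # assumed threshold: roughly square box
        return "sitting"
    return "lying"


def detect_fall(boxes: list[tuple[float, float]], max_frames: int = 5) -> bool:
    """Flag a possible fall: 'standing' followed by 'lying' within max_frames frames.

    boxes holds one (width, height) bounding box per frame for a single tracked person.
    """
    postures = [classify_posture(w, h) for w, h in boxes]
    for i, p in enumerate(postures):
        if p == "standing" and "lying" in postures[i + 1 : i + 1 + max_frames]:
            return True
    return False
```

The time window is what separates a sudden fall from someone deliberately lying down over several seconds; a deployed system would tune it to the camera's frame rate.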

The authors mention the role of the Embedded Vision Alliance (EVA), which is “a collaboration to enable rapid growth of computer vision features in embedded systems”, in helping to transform the potential of embedded vision into reality.

ABOUT THE AUTHORS

Brian Dipert is a senior analyst with Berkeley Design Technology, Inc. (BDTI) and the editor in chief of the Embedded Vision Alliance. Prior to joining BDTI and the EVA, he spent more than 14 years as a senior technical editor at EDN Magazine; before joining EDN, he spent three years at Magnavox Electronic Systems Company and eight years at Intel Corporation. He holds a bachelor’s degree in electrical engineering from Purdue University.

Amit Shoham is a distinguished engineer with BDTI. At BDTI, he provides technical leadership on a wide variety of projects focused on evaluating, improving, and creating processors, tools, algorithms, software, and system designs for embedded digital signal processing applications. He holds a bachelor’s degree in computer systems engineering and a master’s degree in electrical engineering, both from Stanford University.