Building Brain Machine Interfaces – Speech, Language and Communication

By Nitish Thakor

Prosthetic limb technology has been revolutionized by the development of anthropomorphic prosthetic arms with more than 20 degrees of freedom. While this is truly remarkable technology, controlling such an arm intuitively can be challenging. The first article in this series reviewed brain machine interface (BMI) technology, methods to decode brain signals, and strategies for controlling a dexterous prosthetic limb with many degrees of freedom. Additional applications of BMI technology have begun to appear: using neural signals to control wheelchairs and computers, brain interfaces for speech and communication, and reverse engineering brain activity to interpret higher brain functions.

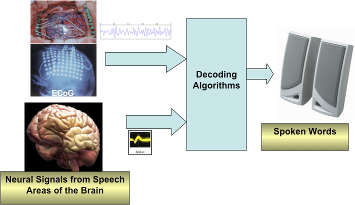

This article summarizes recent evidence that BMI may be a valid and potentially useful approach to understanding the brain’s control of speech and language. Speech BMIs record and interpret brain waves from the brain areas involved in speech production and language comprehension. Figure 1 presents a schematic of a generic speech BMI. The remainder of this article presents selected reports that demonstrate the feasibility of speech BMIs.

Figure 1: Diagram of a speech BMI. Electrocorticogram (ECoG) signals from electrodes located over the speech areas (top path) or neural spike signals recorded with implanted microelectrodes (bottom path) are decoded to reverse-engineer speech. The current procedure is to relate a specific set of ECoG signal patterns to a selected library of spoken words, but the dream is to develop a sufficient “vocabulary” of spoken words to reverse-engineer speech from brain rhythms (graphics assembled from various posted or published pictures).

Brain Areas Involved in Hearing and Speech

Primary auditory cortex (A1) – Generates a faithful representation of the spectro-temporal properties of the sound waveform and processes acoustic cues relevant for speech perception, such as formants, formant transitions, and syllable rate.

Posterior superior temporal gyrus (pSTG; part of classical Wernicke’s area) – Thought to play a critical role in transforming acoustic information into phonetic and pre-lexical representations. Believed to process spectro-temporal features essential for auditory object recognition.

Left inferior frontal gyrus (LIFG; traditionally considered Broca’s area) – Broca’s area is commonly assumed to be devoted to language production rather than language comprehension, but recent investigations suggest that it is also involved in various cognitive and perceptual tasks.

Can spoken words be decoded from brain rhythms?

Kellis et al. report decoding spoken words using local field potentials (LFPs). ECoG and LFP signals are recorded on or near the brain surface, and both provide more spatially localized information than scalp EEG. Dr. Greger’s team at the University of Utah used “micro-ECoG” grids of closely spaced electrodes, and their work shows the potential to classify articulated words from surface LFPs recorded with such grids. They carried out spectrogram analysis on all channels of the micro-ECoG grids, assembled the results into a large matrix of electrodes and trials, and used principal component analysis to reduce this matrix before classification. Notably, some electrodes were over the face motor cortex (FMC) while others were over Wernicke’s area (see inset). They found that the FMC was active during a speech task while Wernicke’s area was active during conversation. Classification was better from FMC electrodes than from Wernicke’s area electrodes, possibly because the FMC is involved in controlling the musculature of speech and its signal was more robust. Wernicke’s area is active in conversation, but the ability to decode speech reception or word or sentence repetition, known functions of this area, was not explored. Clearly more work remains to be done to decipher speech from ECoG signals.
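The pipeline described for the micro-ECoG study (spectral features from every electrode, stacked into a trials × features matrix, then principal component analysis) can be sketched as follows. This is a minimal illustration under stated assumptions, not Kellis et al.’s code: PCA is computed with NumPy’s SVD, and the nearest-centroid classifier, the feature sizes and the synthetic “word” data are hypothetical choices made only for the example.

```python
import numpy as np

def pca_fit(X, n_components):
    """Mean and top principal axes of X (rows = trials, columns = features)."""
    mean = X.mean(axis=0)
    # Rows of Vt are the principal axes, ordered by explained variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def pca_transform(X, mean, axes):
    """Project trials onto the retained principal axes."""
    return (X - mean) @ axes.T

def fit_centroids(Z, labels):
    """Mean PCA score of the training trials for each word label."""
    classes = np.unique(labels)
    centroids = np.stack([Z[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def predict(Z, classes, centroids):
    """Label each trial with the word whose centroid is nearest (Euclidean)."""
    dists = np.linalg.norm(Z[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]

# Synthetic demo (hypothetical sizes): 3 words, 16 electrodes x 10 spectral features.
rng = np.random.default_rng(0)
n_words, n_per_word, n_features = 3, 20, 16 * 10
prototypes = rng.normal(size=(n_words, n_features))

def simulate_trials():
    """Noisy trials around each word's (hypothetical) prototype feature vector."""
    labels = np.repeat(np.arange(n_words), n_per_word)
    X = prototypes[labels] + 0.5 * rng.normal(size=(labels.size, n_features))
    return X, labels

X_train, y_train = simulate_trials()
X_test, y_test = simulate_trials()
mean, axes = pca_fit(X_train, n_components=5)
classes, centroids = fit_centroids(pca_transform(X_train, mean, axes), y_train)
y_pred = predict(pca_transform(X_test, mean, axes), classes, centroids)
accuracy = np.mean(y_pred == y_test)
```

PCA here only compresses the electrode-by-spectrum matrix; any classifier could follow it, and the nearest-centroid rule was picked purely because it is short.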

Can spectro-temporal auditory features of spoken words and continuous sentences be reconstructed from brain rhythms?

Pasley et al. used recordings from non-primary auditory cortex in humans (the superior temporal gyrus) to reverse engineer the cortical representation of sound and speech. Their approach, a linear model mapping the ECoG responses to the auditory spectrogram of the sound, first decoded the slow temporal fluctuations corresponding to syllables. This simple model was not sufficient for fast changes in the speech pattern, which required a nonlinear model based on the temporal modulation of energy. Their work demonstrates the feasibility of decoding cortical rhythms coincident with sounds and speech, and serves as a building block for someday reverse engineering sounds and speech imagined within the brain. According to the authors, “The results provide insights into higher order neural speech processing and suggest it may be possible to readout intended speech directly from brain activity.”

Spectrum and Spectrogram

The most common way to analyze brain waves, whether the electroencephalogram (EEG) or the electrocorticogram (ECoG), is to calculate the spectrum, i.e., to decompose the signal into its constituent frequency bands. Neurologists are most accustomed to decomposing the EEG into its δ, θ, α, β, and γ bands and using the power and other features in these bands for clinical interpretation. However, when signals vary over time, as EEG and speech signals do, the spectrogram (a sequence of spectra computed over short, successive time windows) is the most commonly used tool. Modern, robust methods for analyzing time-varying signals also include time-frequency analysis with wavelet transforms.
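To make the spectrum/spectrogram distinction concrete, here is a minimal sketch using only NumPy: a Hann-windowed short-time Fourier transform, followed by summing power within each conventional EEG band. The band edges in `BANDS`, the window length and the synthetic alpha-then-beta signal are assumptions for illustration; published band limits vary slightly between sources.

```python
import numpy as np

# Conventional EEG bands in Hz; exact edges differ between sources (assumed values).
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 100)}

def spectrogram(x, fs, win_len, hop):
    """Power spectrogram from a Hann-windowed short-time Fourier transform.

    Returns (freqs, times, power) with power shaped (n_freqs, n_frames).
    """
    window = np.hanning(win_len)
    starts = np.arange(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (starts + win_len / 2) / fs  # centre of each frame, in seconds
    return freqs, times, power.T

def band_power(freqs, power, band):
    """Total power inside [lo, hi) Hz for every time frame."""
    lo, hi = band
    mask = (freqs >= lo) & (freqs < hi)
    return power[mask].sum(axis=0)

# Synthetic "EEG": a 10 Hz (alpha) rhythm for 2 s, then 20 Hz (beta) for 2 s.
fs = 250
t = np.arange(4 * fs) / fs
x = np.where(t < 2, np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t))
freqs, times, power = spectrogram(x, fs, win_len=fs, hop=fs // 2)
powers = {name: band_power(freqs, power, band) for name, band in BANDS.items()}
```

A single spectrum of the whole 4 s trace would show both peaks at once; the spectrogram shows alpha power dominating the early frames and beta power the late ones, which is why it suits time-varying signals.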

Linear and Nonlinear Methods

The linear model assumes a linear mapping between neural responses and the auditory spectrogram.
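One common way to fit such a linear mapping is regularized (ridge) least squares on time-lagged neural responses. The sketch below assumes that approach; the lag range, the ridge penalty `alpha`, the helper names and the synthetic electrode data are illustrative choices, not the settings used by Pasley et al.

```python
import numpy as np

def lagged(R, lags):
    """Concatenate time-shifted copies of R (time x electrodes).

    Column block i holds R[t - lags[i]]: positive lags look at earlier
    responses, negative lags at later ones. Samples shifted in from
    outside the recording are set to zero instead of wrapping around.
    """
    T, E = R.shape
    out = np.zeros((T, E * len(lags)))
    for i, lag in enumerate(lags):
        shifted = np.roll(R, lag, axis=0)
        if lag > 0:
            shifted[:lag] = 0
        elif lag < 0:
            shifted[lag:] = 0
        out[:, i * E:(i + 1) * E] = shifted
    return out

def fit_linear_decoder(R, S, lags, alpha=1.0):
    """Ridge solution W minimising ||lagged(R) W - S||^2 + alpha ||W||^2."""
    X = lagged(R, lags)
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ S)

def reconstruct(R, W, lags):
    """Predicted spectrogram (time x frequency bands)."""
    return lagged(R, lags) @ W

# Synthetic data: 16 "electrodes" respond to an 8-band spectrogram 2 frames late.
rng = np.random.default_rng(1)
T, F, E = 2000, 8, 16
S = np.abs(rng.normal(size=(T, F)))           # stand-in auditory spectrogram
M = rng.normal(size=(F, E))                   # hypothetical mixing weights
R = np.roll(S, 2, axis=0) @ M + 0.1 * rng.normal(size=(T, E))
lags = range(0, -5, -1)                       # responses 0..4 frames after the sound
W = fit_linear_decoder(R[:1500], S[:1500], lags)      # fit on the first 1500 frames
S_hat = reconstruct(R[1500:], W, lags)                # test on held-out frames
corr = [np.corrcoef(S[1500:, f], S_hat[:, f])[0, 1] for f in range(F)]
```

Negative lags are used because neural responses follow the sound, so each spectrogram frame is best predicted from activity recorded slightly afterwards.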

The nonlinear model assumes a linear mapping between neural responses and the modulation representation, but the modulation representation itself is a nonlinear transformation of the spectrogram.
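The nonlinear step can be illustrated by building a simple modulation representation: each spectrogram channel is filtered along time with complex Gabor filters tuned to different temporal modulation rates, and the magnitude of the complex output is taken. This is a minimal sketch, assuming a Gabor filter bank with a hypothetical `n_cycles` width parameter; it is not the exact modulation model of Pasley et al. A linear decoder could then be fit to this representation instead of to the spectrogram itself.

```python
import numpy as np

def gabor_kernel(rate_hz, fs, n_cycles=2.0):
    """Complex Gabor filter tuned to one temporal modulation rate (Hz).

    fs is the spectrogram frame rate; n_cycles sets the Gaussian width
    (an assumed parameterisation).
    """
    sigma = n_cycles / (2 * np.pi * rate_hz)      # Gaussian s.d. in seconds
    half = int(np.ceil(3 * sigma * fs))
    t = np.arange(-half, half + 1) / fs
    k = np.exp(-t**2 / (2 * sigma**2)) * np.exp(2j * np.pi * rate_hz * t)
    return k / np.abs(k).sum()                     # unit gain at rate_hz

def modulation_energy(spec, fs, rates):
    """Nonlinear modulation representation of a spectrogram.

    spec has shape (n_channels, n_frames) and must have more frames than
    the longest kernel. Returns shape (len(rates), n_channels, n_frames).
    """
    out = np.empty((len(rates),) + spec.shape)
    for i, rate in enumerate(rates):
        k = gabor_kernel(rate, fs)
        for ch in range(spec.shape[0]):
            env = spec[ch] - spec[ch].mean()       # remove the channel's mean level
            # Taking the magnitude of the complex output is the nonlinear step.
            out[i, ch] = np.abs(np.convolve(env, k, mode="same"))
    return out

# Synthetic 2-channel spectrogram at 100 frames/s: channel 0 fluctuates at
# 4 Hz (syllable-like), channel 1 at 16 Hz (faster).
fs = 100
t = np.arange(10 * fs) / fs
spec = np.vstack([1 + 0.5 * np.sin(2 * np.pi * 4 * t),
                  1 + 0.5 * np.sin(2 * np.pi * 16 * t)])
energy = modulation_energy(spec, fs, rates=[4, 16])
```

Because the magnitude discards the phase of each oscillation, fast fluctuations become slowly varying energy values that a linear model can track more easily.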

Can semantic information be decoded from brain rhythms?

Decoding higher-order brain activity may be even more interesting, as such work lays the path toward a “cognitive” BMI that could be used for advanced interactions with objects or people, or for carrying out complex tasks. One example is the preliminary report of Wong et al. on deriving semantic information from neural signals. Semantic information is conceptual knowledge: essentially, the concept of a specific object or action.

At the 2011 IEEE Engineering in Medicine and Biology Conference, Wong et al. presented an ECoG platform for a language task in which subjects named pictures of objects belonging to different semantic categories. As previously reported, increased activity in the gamma band (60–120 Hz) tracks the temporal progression of speech production during simple language tasks. The authors contend that “…we expect that our findings about semantic information decoding will inspire the development of semantic-based BCI as communication aids. Such systems may potentially serve as a natural, intuitive and fast interface for an individual with severe disability to communicate with others.”
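Tracking how gamma-band activity evolves over a trial usually starts by extracting its amplitude envelope. The sketch below assumes an ideal FFT band-pass over 60–120 Hz combined with the analytic-signal (Hilbert) trick; it is an illustration, not Wong et al.’s processing pipeline, and the synthetic trace with a 90 Hz burst is hypothetical.

```python
import numpy as np

def band_envelope(x, fs, lo=60.0, hi=120.0):
    """Amplitude envelope of x inside [lo, hi] Hz.

    Uses an ideal (brick-wall) FFT band-pass and keeps only positive
    frequencies, doubled, which yields the analytic signal of the band.
    """
    X = np.fft.fft(x)
    freqs = np.fft.fftfreq(len(x), d=1.0 / fs)
    gain = np.where((freqs >= lo) & (freqs <= hi), 2.0, 0.0)
    return np.abs(np.fft.ifft(X * gain))

# Synthetic single-channel trace: a large 10 Hz rhythm plus a brief 90 Hz
# burst centred at t = 1 s, standing in for task-related gamma activity.
fs = 1000
t = np.arange(2 * fs) / fs
burst = np.exp(-0.5 * ((t - 1.0) / 0.1) ** 2) * np.sin(2 * np.pi * 90 * t)
x = 5 * np.sin(2 * np.pi * 10 * t) + burst
env = band_envelope(x, fs)
```

The envelope rises and falls with the gamma burst while ignoring the much larger 10 Hz rhythm; per-channel envelopes like this could then serve as features for a semantic-category classifier.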

BMI technology is generally associated with providing direct brain control of motor intent, and as such its most common applications have been computer interfaces for patients with Lou Gehrig’s disease (amyotrophic lateral sclerosis) and wheelchair control for paralyzed patients. However, a BMI that provides speech or language capability would open up exciting new frontiers, such as a direct communication link for patients with speech impairments, or silent communication channels for human-machine interaction.

For Further Reading

S. Kellis, K. Miller, K. Thomson, R. Brown, P. House and B. Greger, “Decoding spoken words using local field potentials recorded from the cortical surface,” J. Neural Eng., vol. 7, no. 5, 056007, 2010, doi:10.1088/1741-2560/7/5/056007. (Also see http://www.bioen.utah.edu/faculty/greger/ )

B. N. Pasley, S. V. David, N. Mesgarani, A. Flinker, S. A. Shamma, N. E. Crone, R. T. Knight and E. F. Chang, “Reconstructing speech from human auditory cortex,” PLoS Biol., vol. 10, no. 1, e1001251, 2012, doi:10.1371/journal.pbio.1001251. (Also see http://newscenter.berkeley.edu/2012/01/31/scientists-decode-brain-waves-to-eavesdrop-on-what-we-hear/ )

W. Wong, A. D. Degenhart, P. Sudre, D. A. Pomerleau and E. C. Tyler-Kabara, “Decoding semantic information from human Electrocorticographic (ECoG) signals,” Proc. IEEE Eng. Med. Biol. Conf., Boston, MA, 2011.

Nitish V. Thakor is a Professor of Biomedical Engineering at Johns Hopkins University, Baltimore, USA, as well as the Director of the newly formed institute for neurotechnology, SiNAPSE, at the National University of Singapore.